Description: AddShoppers is an ecommerce personalization and merchandising platform that helps online retailers increase conversion rates and grow revenue. Its features include personalized product recommendations, email marketing and segmentation, and A/B testing.

Type: Open Source Test Automation Framework

Founded: 2011

Primary Use: Mobile app testing automation

Supported Platforms: iOS, Android, Windows

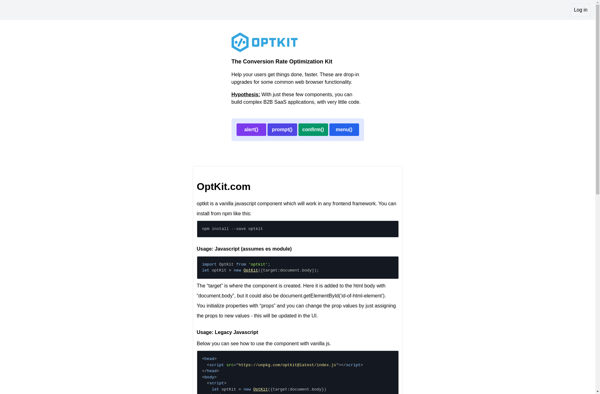

Description: OptKit is an open-source optimization toolkit for machine learning. It provides implementations of optimization algorithms such as gradient descent, Adam, and RMSProp to help train neural networks more efficiently.

Type: Cloud-based Test Automation Platform

Founded: 2015

Primary Use: Web, mobile, and API testing

Supported Platforms: Web, iOS, Android, API