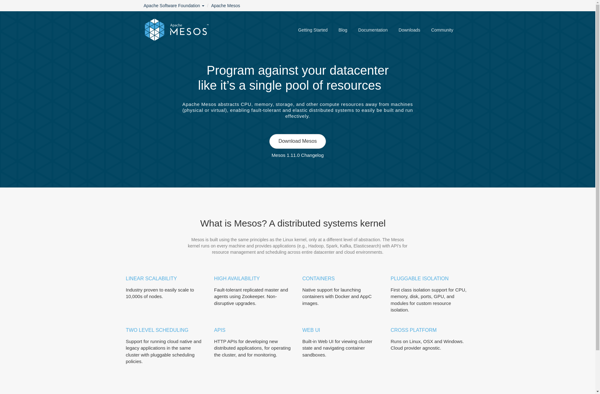

Description: Apache Mesos is an open-source cluster manager that provides efficient resource isolation and sharing across distributed applications, or frameworks. It sits between the application layer and the operating system on a distributed system, making it easier to deploy and manage applications in large-scale clustered environments.

Type: Open Source Cluster Manager

Founded: 2011

Primary Use: Deploying and managing applications in large-scale clustered environments

Supported Platforms: Linux, macOS, Windows

Description: GridRepublic is a cloud computing platform that allows users to access on-demand compute power. It enables running high-performance computing workloads in the cloud by aggregating spare computing capacity.

Type: Cloud Computing Platform

Founded: 2015

Primary Use: Running high-performance computing workloads on aggregated spare computing capacity

Supported Platforms: Web, iOS, Android, API