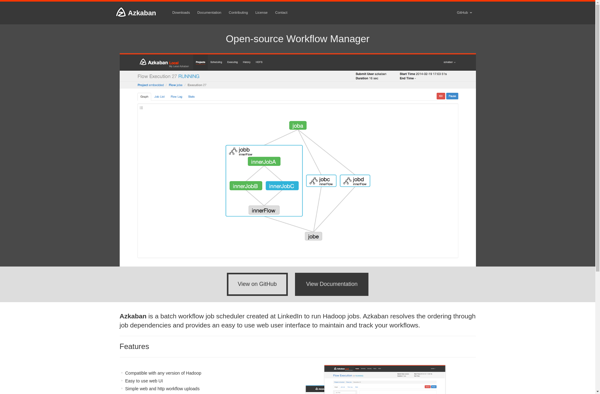

Description: Azkaban is an open-source workflow scheduler created at LinkedIn to run Hadoop jobs. Users can create, schedule, and monitor workflows made up of multiple jobs through a web interface, and the scheduler manages the dependencies between those jobs.

Type: Open Source Workflow Scheduler

Founded: 2011

Primary Use: Creating, scheduling, and monitoring Hadoop job workflows

Supported Platforms: Hadoop

Description: Apache Oozie is an open-source workflow scheduling and coordination system for managing Hadoop jobs. Users define workflows that describe multi-stage Hadoop jobs, and Oozie executes those jobs in a dependable, repeatable fashion.

Type: Open Source Workflow Scheduling and Coordination System

Founded: 2015

Primary Use: Defining and running multi-stage Hadoop job workflows

Supported Platforms: Hadoop
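
Both descriptions above come down to the same idea: a workflow is a set of jobs, and a job may only run once the jobs it depends on have finished. The sketch below illustrates that idea in plain Python using the standard library's `graphlib` module (Python 3.9+). It is not Azkaban's or Oozie's actual API or file format; the job names and the `execution_order` helper are hypothetical and exist only for illustration.

```python
from graphlib import TopologicalSorter

# Hypothetical workflow: each job maps to the set of jobs it depends on.
workflow = {
    "extract": set(),
    "clean": {"extract"},
    "aggregate": {"clean"},
    "report": {"aggregate", "clean"},
}


def execution_order(jobs):
    """Return a run order in which every job follows all of its dependencies."""
    # static_order() raises graphlib.CycleError if the dependencies form a cycle.
    return list(TopologicalSorter(jobs).static_order())


order = execution_order(workflow)
print(order)
```

A real scheduler adds much more on top of this ordering step, such as timed triggers, retries, and monitoring, but resolving the dependency graph is the part both descriptions refer to.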