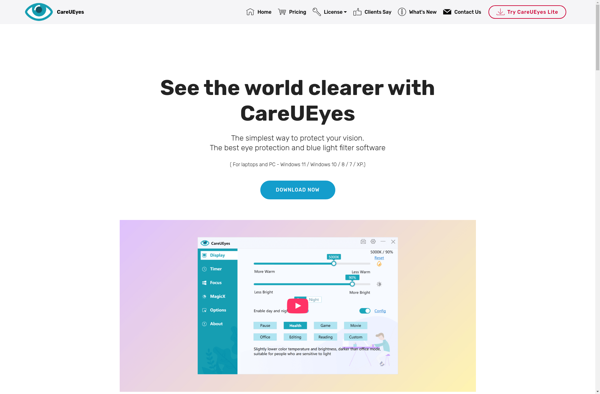

Description: CareUEyes is a digital health platform for remote patient monitoring and chronic care management. It lets healthcare providers review patient health data remotely and provides tools for care coordination.

Type: Open Source Test Automation Framework

Founded: 2011

Primary Use: Mobile app testing automation

Supported Platforms: iOS, Android, Windows

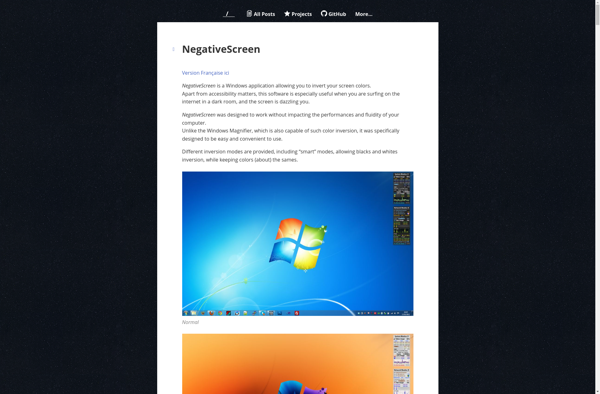

Description: NegativeScreen is a web- or desktop-based AI platform that helps organizations reduce bias and toxicity in their products. It analyzes text, images, audio, and other media to detect harmful content, which can then be flagged or removed.

Type: Cloud-based Test Automation Platform

Founded: 2015

Primary Use: Web, mobile, and API testing

Supported Platforms: Web, iOS, Android, API