Description: GauGAN2 is an AI-powered painting tool that allows users to turn sketches into photorealistic landscape images. It uses generative adversarial networks to synthesize realistic images from simple inputs.

Type: Open Source Test Automation Framework

Founded: 2011

Primary Use: Mobile app testing automation

Supported Platforms: iOS, Android, Windows

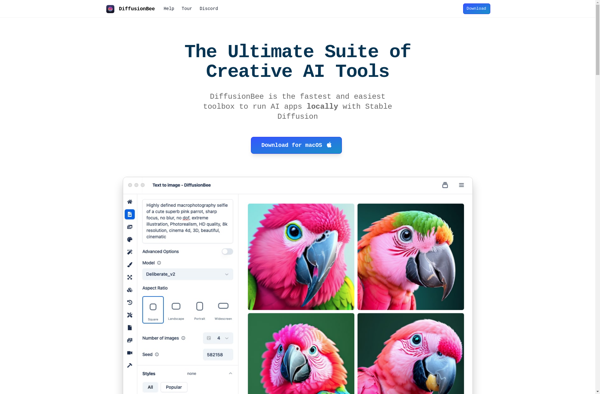

Description: DiffusionBee is an open-source desktop application for text-to-image generation with Stable Diffusion, which is a diffusion model rather than an adversarially trained one. It allows users to fine-tune Stable Diffusion models on their own datasets and generate high-quality images.

Type: Cloud-based Test Automation Platform

Founded: 2015

Primary Use: Web, mobile, and API testing

Supported Platforms: Web, iOS, Android, API