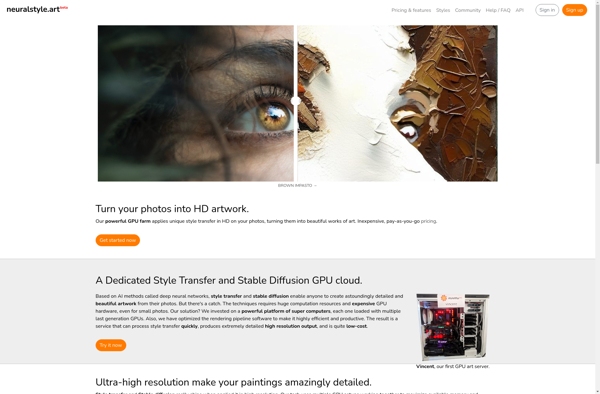

Description: neuralstyle.art is an AI-powered web application that renders images and videos in different art styles. It uses neural networks to capture the artistic style of famous painters and apply it to the user's own media.

Type: Open Source Test Automation Framework

Founded: 2011

Primary Use: Mobile app testing automation

Supported Platforms: iOS, Android, Windows
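The description does not say which method neuralstyle.art uses. A common basis for this kind of tool is the neural style transfer approach of Gatys et al. (2015, "A Neural Algorithm of Artistic Style"). That approach represents a painting's style by the Gram matrices of CNN feature maps, which record which features occur together but not where they occur. The sketch below assumes that approach and shows only the Gram matrix and the per-layer style loss in plain Python. The function names and the tiny hand-written feature maps are hypothetical. A real system would compute the feature maps with a pretrained network and optimize the output image against these losses.

```python
from typing import List

# A feature map flattened to shape (channels, positions); hypothetical toy data stands in
# for the activations a pretrained CNN would produce.
Matrix = List[List[float]]


def gram_matrix(features: Matrix) -> Matrix:
    """Return G = F F^T: G[i][j] is the inner product of channels i and j over all positions."""
    channels = len(features)
    return [
        [sum(a * b for a, b in zip(features[i], features[j])) for j in range(channels)]
        for i in range(channels)
    ]


def style_loss(generated: Matrix, style: Matrix) -> float:
    """Squared difference of Gram matrices, scaled by 1 / (4 N^2 M^2) as in Gatys et al.

    N is the number of channels and M the number of spatial positions.
    """
    n = len(generated)
    m = len(generated[0])
    g = gram_matrix(generated)
    a = gram_matrix(style)
    diff = sum((g[i][j] - a[i][j]) ** 2 for i in range(n) for j in range(n))
    return diff / (4 * n * n * m * m)


if __name__ == "__main__":
    painting = [[1.0, 2.0], [3.0, 4.0]]
    # Same feature values at swapped positions: the "where" changes, the style does not.
    shuffled = [[2.0, 1.0], [4.0, 3.0]]
    print(gram_matrix(painting))            # [[5.0, 11.0], [11.0, 25.0]]
    print(style_loss(shuffled, painting))   # 0.0
```

Because the Gram matrix sums over positions, rearranging where features appear leaves the style loss at zero. That property lets the method transfer a painter's textures and brushwork without copying the painting's layout.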

Description: DeepDream is an image synthesis program that uses a convolutional neural network to find and amplify patterns in images, giving them a dreamlike, hallucinatory appearance. It was developed by Google engineers Alexander Mordvintsev and Chris Olah in 2015.

Type: Cloud-based Test Automation Platform

Founded: 2015

Primary Use: Web, mobile, and API testing

Supported Platforms: Web, iOS, Android, API
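DeepDream's "find and amplify patterns" step works by gradient ascent on the input image rather than on the network's weights. The image is changed so that a chosen layer's activations grow stronger, which makes whatever the layer faintly detects more pronounced. The sketch below is a deliberately reduced illustration. It replaces the trained CNN (the original used Google's Inception network) with a single hand-written 1-D filter, an assumption that lets the example run on the standard library alone. It also omits the multi-scale "octaves" and jitter of the real tool. The step normalization by the mean absolute gradient is modelled on Google's original DeepDream notebook. All function names are hypothetical.

```python
from typing import List


def responses(signal: List[float], kernel: List[float]) -> List[float]:
    """Valid 1-D cross-correlation: one 'activation' per window position."""
    k = len(kernel)
    return [
        sum(kernel[j] * signal[i + j] for j in range(k))
        for i in range(len(signal) - k + 1)
    ]


def objective(signal: List[float], kernel: List[float]) -> float:
    """DeepDream-style objective: squared L2 norm of the layer's activations."""
    return sum(r * r for r in responses(signal, kernel))


def gradient(signal: List[float], kernel: List[float]) -> List[float]:
    """d(objective)/d(signal[p]) = sum over windows i covering p of 2 * r_i * kernel[p - i]."""
    k = len(kernel)
    grad = [0.0] * len(signal)
    for i, r in enumerate(responses(signal, kernel)):
        for j in range(k):
            grad[i + j] += 2.0 * r * kernel[j]
    return grad


def dream(signal: List[float], kernel: List[float],
          steps: int = 10, step_size: float = 0.1) -> List[float]:
    """Gradient ascent on the *input*: nudge the signal so the filter fires harder."""
    x = list(signal)
    for _ in range(steps):
        g = gradient(x, kernel)
        scale = sum(abs(v) for v in g) / len(g)
        if scale == 0.0:
            break  # no gradient: the filter detects nothing to amplify
        # Normalize by mean |gradient| so the step size does not depend on activation scale.
        x = [xi + step_size * gi / scale for xi, gi in zip(x, g)]
    return x


if __name__ == "__main__":
    edge_filter = [-1.0, 1.0]           # hypothetical stand-in for a learned CNN filter
    faint = [0.0, 0.1, 0.0, 0.2, 0.1]   # a signal with weak edge-like structure
    dreamed = dream(faint, edge_filter)
    print(objective(faint, edge_filter), objective(dreamed, edge_filter))
```

Running it shows the objective rising as the faint edges are exaggerated. In the full tool the same loop runs on image pixels through a deep network, which is what turns faint textures into the eyes, animals, and swirls DeepDream is known for.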