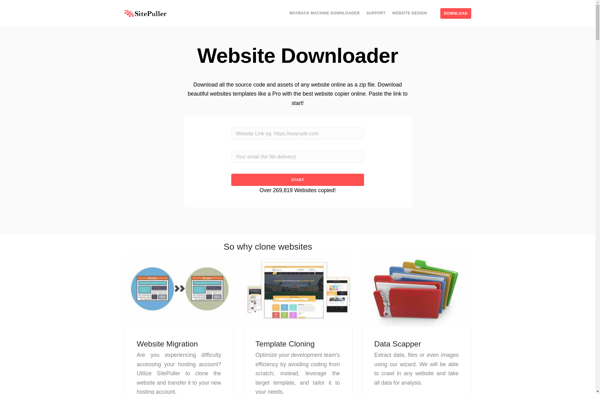

Description: SitePuller is a web crawler and website copier. It lets users download entire websites for offline browsing, migrate sites, generate site maps, and more. The tool is aimed at web developers, digital marketers, researchers, and anyone who needs to archive or analyze websites.

Type: Open Source Test Automation Framework

Founded: 2011

Primary Use: Mobile app test automation

Supported Platforms: iOS, Android, Windows

Description: BlackWidow is an open-source web vulnerability scanner that helps developers and security professionals find weaknesses in web applications. It crawls a website to map the pages and endpoints it can reach, then checks them for SQL injection, cross-site scripting (XSS), insecure configurations, and other flaws.

Type: Cloud-based Test Automation Platform

Founded: 2015

Primary Use: Web, mobile, and API testing

Supported Platforms: Web, iOS, Android, API
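Both the BlackWidow entry (crawling a site to map its pages and endpoints) and the SitePuller entry (generating site maps) rely on the same basic step: a breadth-first crawl that follows links but stays on one host. The sketch below shows that step in general terms only. It is not either tool's implementation. The names `crawl_site_map` and `LinkExtractor`, and the `fetch` callable (URL in, HTML string or `None` out), are hypothetical. An in-memory dictionary stands in for real HTTP requests so that the example runs offline.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin, urlparse


class LinkExtractor(HTMLParser):
    """Collects the href values of <a> tags in an HTML document."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def crawl_site_map(start_url, fetch, max_pages=100):
    """Breadth-first crawl restricted to start_url's host.

    `fetch` is a hypothetical callable (url -> HTML string, or None if the
    page is unreachable). Returns a dict that maps each visited page to the
    sorted, de-duplicated same-host links found on it.
    """
    host = urlparse(start_url).netloc
    site_map = {}
    queue = deque([start_url])
    seen = {start_url}
    while queue and len(site_map) < max_pages:
        url = queue.popleft()
        html = fetch(url)
        if html is None:
            continue  # skip pages that could not be fetched
        parser = LinkExtractor()
        parser.feed(html)
        parser.close()
        outgoing = set()
        for href in parser.links:
            # Resolve relative links and drop #fragments so each page has one URL.
            absolute, _fragment = urldefrag(urljoin(url, href))
            if urlparse(absolute).netloc != host:
                continue  # ignore off-site links
            outgoing.add(absolute)
            if absolute not in seen:
                seen.add(absolute)
                queue.append(absolute)
        site_map[url] = sorted(outgoing)
    return site_map


# Hypothetical in-memory "website" used in place of real HTTP requests.
PAGES = {
    "https://example.com/": '<a href="/about">About</a> <a href="https://other.org/">Ext</a>',
    "https://example.com/about": '<a href="/">Home</a> <a href="contact#form">Contact</a>',
    "https://example.com/contact": "<p>No links</p>",
}

for page, links in crawl_site_map("https://example.com/", PAGES.get).items():
    print(page, "->", links)
```

The crawl uses a queue rather than recursion, so pages are visited level by level and the `max_pages` cap bounds the work. A real crawler would also need politeness delays, `robots.txt` handling, and error handling around the HTTP client.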