Description: SiteSucker is a Mac application that allows users to download entire websites for offline browsing. It automatically scans sites and downloads web pages, images, CSS, JavaScript, and other files.

Type: Website Downloader (Mac Application)

Founded: 2011

Primary Use: Downloading websites for offline browsing

Supported Platforms: macOS, iOS

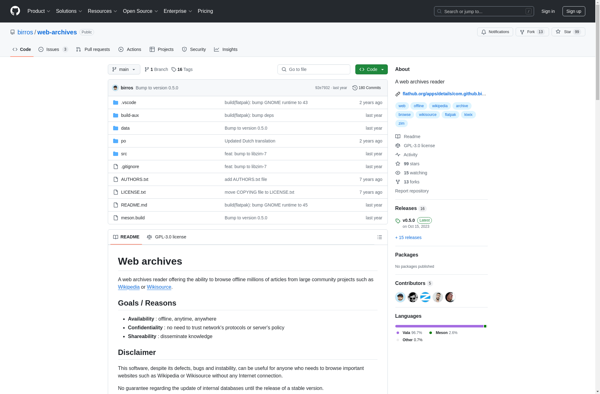

Description: WebArchives is open-source web archiving software that archives websites locally or remotely. It can schedule regular captures of a site to preserve its content over time.

Type: Open-Source Web Archiving Software

Founded: 2015

Primary Use: Scheduled archiving of websites, locally or remotely

Supported Platforms: Web, iOS, Android, API