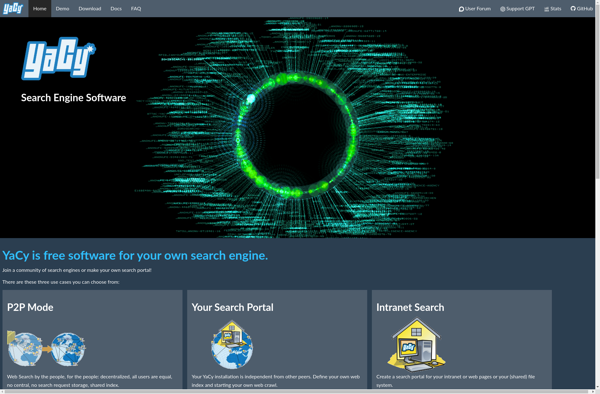

Description: YaCy is an open source, decentralized search engine that allows users to search the web in a private and censorship-resistant way. It forms a peer-to-peer network where each node indexes a portion of the web using a crawling algorithm.

Type: Open Source Peer-to-Peer Search Engine

Founded: 2003

Primary Use: Private, decentralized web search

Supported Platforms: Windows, macOS, Linux

Description: Common Crawl is a non-profit organization that crawls the web and makes the resulting crawl data available to the public for free. Researchers, developers, and entrepreneurs can use the data to build analytics and applications.

Type: Non-Profit Open Web Crawl Data Repository

Founded: 2007

Primary Use: Web crawl data for research, analytics, and application development

Supported Platforms: Web (datasets hosted on Amazon S3)