Description: CocoScan is document scanning and OCR software for Windows, Mac and Linux. It scans paper documents to PDF and runs OCR on them to make the text searchable. Key features include bulk scanning, PDF editing tools, cloud storage integration and automation workflows.

Type: Open Source Test Automation Framework

Founded: 2011

Primary Use: Mobile app testing automation

Supported Platforms: iOS, Android, Windows

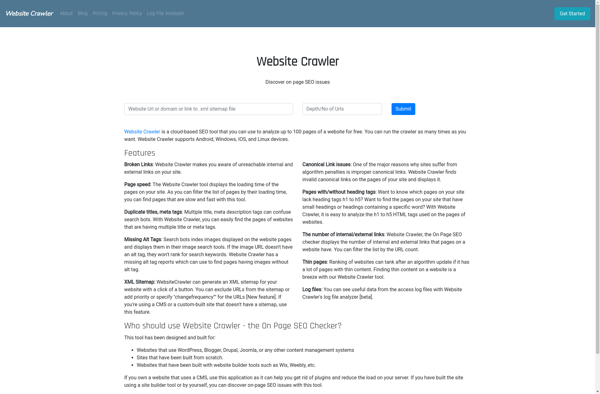

Description: A website crawler is a software program that browses the web automatically. It systematically scans and indexes web pages, following the links on each page to reach the rest of a website. Search engines use website crawlers to keep their search results up to date.

Type: Cloud-based Test Automation Platform

Founded: 2015

Primary Use: Web, mobile, and API testing

Supported Platforms: Web, iOS, Android, API
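
The website crawler description above mentions following links to move through a site. As a rough illustration only, and not the method of any product listed here, the sketch below does a breadth-first crawl using Python's standard library. The names `LinkExtractor`, `crawl`, and `FAKE_SITE`, as well as the `example.test` URLs, are hypothetical and exist only for this example. Pages are served from an in-memory dictionary instead of the network so the example runs offline.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin


class LinkExtractor(HTMLParser):
    """Collects the href value of every <a> tag in a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def crawl(start_url, fetch, max_pages=100):
    """Breadth-first crawl starting at start_url.

    `fetch` is a caller-supplied function (an assumption of this sketch)
    that returns the HTML of a URL as a string, or None if unavailable.
    Returns a dict mapping each fetched URL to the absolute links on it.
    """
    index = {}
    queue = deque([start_url])
    seen = {start_url}  # URLs already queued, so each is visited once
    while queue and len(index) < max_pages:
        url = queue.popleft()
        html = fetch(url)
        if html is None:
            continue  # skip pages that could not be fetched
        parser = LinkExtractor()
        parser.feed(html)
        resolved = []
        for href in parser.links:
            # Resolve relative links and drop any #fragment part.
            absolute, _fragment = urldefrag(urljoin(url, href))
            resolved.append(absolute)
            if absolute not in seen:
                seen.add(absolute)
                queue.append(absolute)
        index[url] = resolved
    return index


# Hypothetical three-page site used in place of real HTTP requests.
FAKE_SITE = {
    "https://example.test/": '<a href="/a">A</a> <a href="/b">B</a>',
    "https://example.test/a": '<a href="/b">B</a> <a href="/">Home</a>',
    "https://example.test/b": '<a href="/missing">Gone</a>',
}

if __name__ == "__main__":
    for page, links in crawl("https://example.test/", FAKE_SITE.get).items():
        print(page, "->", links)
```

Passing `fetch` as a parameter keeps the link-following logic separate from how pages are retrieved. A crawler run against real sites would replace `FAKE_SITE.get` with an HTTP client and would also need to respect `robots.txt` and rate limits, which this sketch leaves out.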