Description: CVAT (Computer Vision Annotation Tool) is an open-source tool for labeling images and video. It supports collaborative annotation of datasets, with features such as predefined tags, interpolation of bounding boxes across frames, and review/acceptance workflows.

Type: Open Source Data Annotation Tool

Founded: 2011

Primary Use: Image and video annotation for computer vision

Supported Platforms: Web

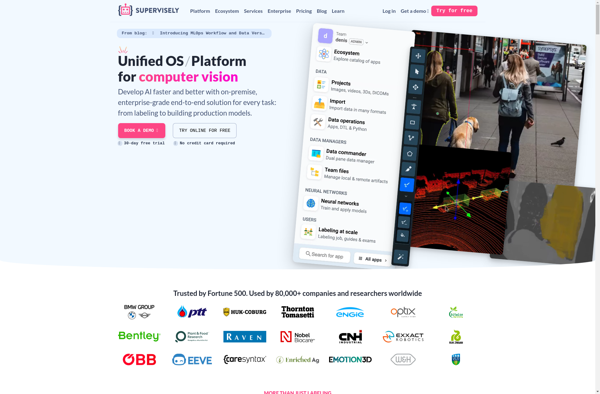

Description: Supervisely is a platform for computer vision and machine learning that lets users annotate data, train neural networks, and deploy models without writing code.

Type: Cloud-based Computer Vision Platform

Founded: 2015

Primary Use: Data annotation, model training, and model deployment

Supported Platforms: Web, API