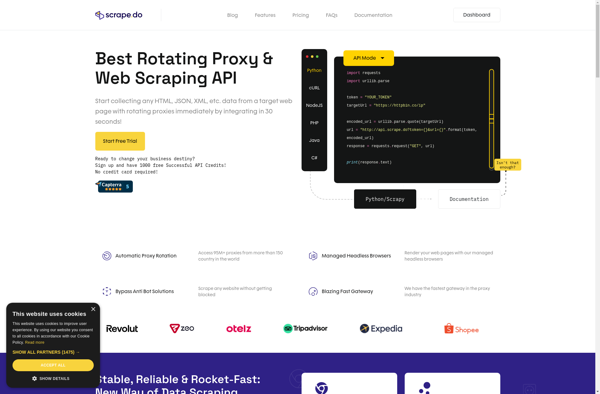

Description: Scrape.do is a web scraping tool that lets you extract data from websites without coding. It provides a visual interface for building scrapers and can scrape text, images, documents, and data tables. It is useful for marketing, research, and data analysis.

Type: Web Scraping Tool

Founded: 2011

Primary Use: Extracting data from websites

Supported Platforms: iOS, Android, Windows

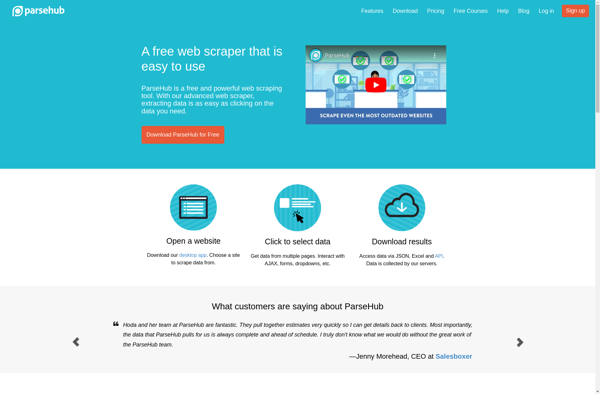

Description: ParseHub is a web scraping tool that lets users extract data from websites without coding. It provides a visual interface for designing scrapers and can export the extracted data to spreadsheets, APIs, databases, apps, and more.

Type: Web Scraping Tool

Founded: 2015

Primary Use: Extracting data from websites

Supported Platforms: Web, iOS, Android, API