Artificial Superintelligence

Artificial Superintelligence: Hypothetical AI

Artificial Superintelligence (ASI) refers to a hypothetical artificial general intelligence that far surpasses human intelligence in speed, reasoning, problem-solving, and learning ability. An ASI could potentially be created through advanced machine learning techniques and increased computing power.

What is Artificial Superintelligence?

Artificial Superintelligence (ASI) is a theoretical form of machine intelligence that far exceeds human-level intellect. Unlike current narrow AI systems designed for specific tasks, ASI would possess general intelligence and reasoning capabilities well beyond those of humans.

ASI remains hypothetical, but its possibility raises complex questions. One proposed route to ASI is recursive self-improvement, in which a system iteratively redesigns successive generations of itself to increase its intelligence. After enough improvement cycles, the system's cognitive abilities could match and then exceed those of a human mind.

The emergence of ASI could bring tremendous benefits, such as helping to solve complex global problems like climate change, disease, and poverty. However, without careful design and control measures aligned with human values and ethics, an unconstrained ASI could also pose existential threats, for example by rapidly pursuing goals that are indifferent or detrimental to human well-being.

Research areas such as AI alignment and AI risk mitigation aim to maximize the benefits of ASI while avoiding uncontrolled harms. But creating provably safe and beneficial ASI remains an enormously complex challenge.

Artificial Superintelligence Features

Features

- Recursive self-improvement capabilities

- General intelligence across multiple domains

- Superhuman reasoning and logic

- Rapid acquisition and application of knowledge

- Creative problem-solving

Pricing

- Research

- Custom Development

The Best Artificial Superintelligence Alternatives

Top AI Tools & Services and Advanced AI and other similar apps like Artificial Superintelligence

Here are some alternatives to Artificial Superintelligence:

Game Dev Story

Smartphone Tycoon 2 (Series)

The Founder

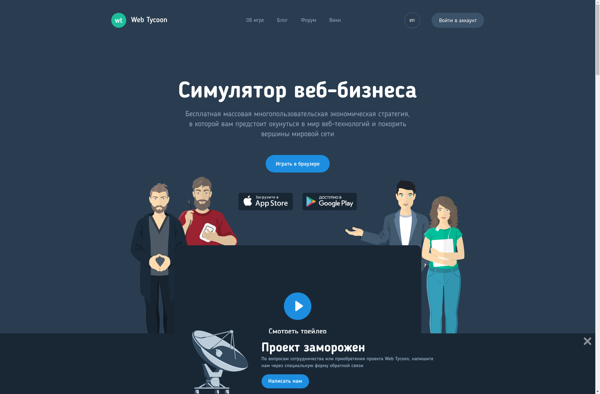

Web Tycoon

Unicorn Startup Simulator