PolitePol

PolitePol: AI-Powered Content Moderation Tool

PolitePol is an AI-powered content moderation tool that helps online communities foster constructive dialogue. It uses natural language processing to detect harmful speech and give real-time suggestions to improve the tone of conversations.

What is PolitePol?

PolitePol is AI-powered content moderation software that helps online platforms and communities foster constructive dialogue and reduce harmful speech. It uses natural language processing and machine learning to analyze text conversations in real time and identify comments that are rude, toxic, racist, sexist, or otherwise problematic.

When PolitePol detects a harmful comment, it takes several actions automatically to improve the conversation. First, it hides or removes the toxic comment so it is not visible publicly on the platform. Second, it sends a private notification to the commenter suggesting edits or improvements to make their message more constructive while still allowing them to express their viewpoint respectfully. The suggestions are customized based on the type of toxicity detected and aim to educate users.
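The hide-then-suggest flow described above can be illustrated with a minimal sketch. This is not PolitePol's actual API or model: the `TRIGGERS` word lists, the `SUGGESTIONS` messages, and the `moderate` function are all hypothetical, and a simple word lookup stands in for a real NLP classifier.

```python
import re
from dataclasses import dataclass
from typing import Optional

# Hypothetical trigger words per toxicity category.
# A real system would use a trained classifier instead of word lists.
TRIGGERS = {
    "insult": {"idiot", "stupid", "moron"},
    "hostility": {"hate", "despise"},
}

# Hypothetical private suggestions, one per detected category.
SUGGESTIONS = {
    "insult": "Try critiquing the idea rather than the person.",
    "hostility": "Consider explaining what you disagree with and why.",
}


@dataclass
class Decision:
    visible: bool               # whether the comment stays publicly visible
    category: Optional[str]     # detected toxicity category, if any
    suggestion: Optional[str]   # private note sent to the commenter, if any


def moderate(comment: str) -> Decision:
    """Hide a flagged comment and pick a suggestion matching its category."""
    # Match whole words so that, e.g., "whatever" does not trigger "hate".
    words = set(re.findall(r"[a-z']+", comment.lower()))
    for category, triggers in TRIGGERS.items():
        if words & triggers:
            return Decision(False, category, SUGGESTIONS[category])
    return Decision(True, None, None)
```

Calling `moderate("You are an idiot")` in this sketch returns a hidden decision in the `insult` category with the matching suggestion, while a neutral comment stays visible with no suggestion.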

In addition to automated moderation, PolitePol also provides useful metrics and insights to community managers and moderators to inform policy decisions. Its dashboard tracks toxicity levels across various demographics and conversation topics, identifying hotspots that need more targeted intervention. Community managers can also override PolitePol's suggestions or customize the sensitivity as needed.
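As a rough illustration of how a dashboard might surface hotspots under an adjustable sensitivity, the sketch below computes the share of flagged comments per topic. The `toxicity_by_topic` function, the `(topic, score)` record shape, and the `threshold` parameter are assumptions made for this example and do not reflect PolitePol's real data model.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def toxicity_by_topic(
    records: Iterable[Tuple[str, float]],
    threshold: float = 0.5,
) -> Dict[str, float]:
    """Return the fraction of comments per topic scoring at or above threshold.

    records: (topic, score) pairs, with score assumed to lie in [0, 1].
    threshold: hypothetical sensitivity setting; lower values flag more comments.
    """
    totals: Dict[str, int] = defaultdict(int)
    flagged: Dict[str, int] = defaultdict(int)
    for topic, score in records:
        totals[topic] += 1
        if score >= threshold:
            flagged[topic] += 1
    return {topic: flagged[topic] / totals[topic] for topic in totals}


# Example data (made up for illustration only).
sample = [("politics", 0.9), ("politics", 0.2), ("sports", 0.1)]
rates = toxicity_by_topic(sample)
```

Raising `threshold` makes the tool less sensitive, so fewer comments count as flagged, which is one simple way a community manager's sensitivity setting could be modeled.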

By combining AI with human oversight, PolitePol allows online communities to keep conversations productive and minimize moderator fatigue. Initial customers have reported a 15-30% reduction in user complaints and policy violations after deploying PolitePol's real-time content analysis and notification system.

PolitePol Features

- AI-powered content moderation

- Detects harmful speech using NLP

- Gives real-time suggestions to improve conversation tone

- Helps foster constructive dialogue in online communities

Pricing

- Freemium

- Subscription-Based

The Best PolitePol Alternatives

Top AI Tools & Services, Content Moderation, and other apps similar to PolitePol

Here are some alternatives to PolitePol:

Distill Web Monitor

Visualping

RSS.app

HARPA AI

WebSite-Watcher

DELTAFEED

FetchRSS

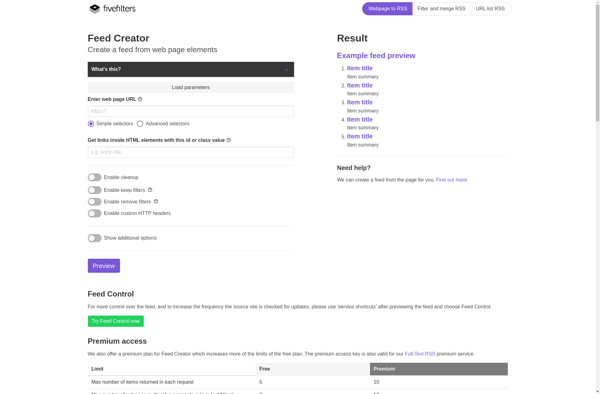

FiveFilters Feed Creator

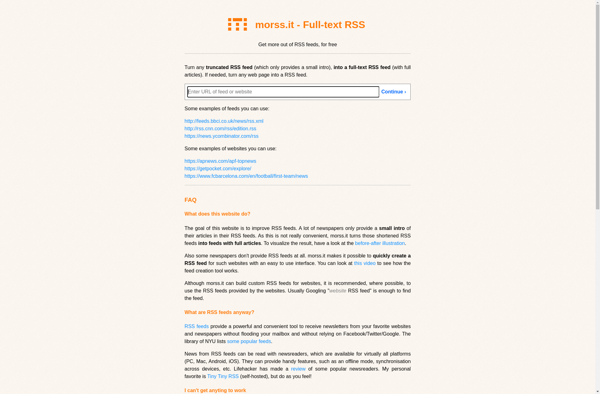

Morss.it

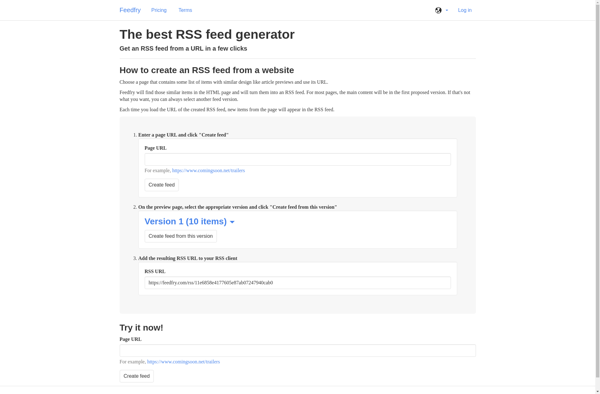

Feedfry

RSSHub

WebRSS