Mollom

Mollom: AI-Powered Content Filtering

Mollom is an AI-powered content filtering and moderation tool used to block spam and offensive content on websites. It analyzes user submissions in real time to identify spam, profanity, and other unwanted content before it is posted.

What is Mollom?

Mollom is a content moderation and spam filtering service that uses artificial intelligence and machine learning to protect websites from spam, profanity, and offensive content. It analyzes all user-generated content submitted to a website in real time, before publication, to identify unwanted content.

Here's how it works: when a user submits content such as a blog comment or forum post, Mollom immediately scans it for spam campaigns, offensive language, and text that doesn't comply with the site's publishing policies. It does this by comparing the submission against an extensive database of known spam and by applying natural language processing and machine learning algorithms to identify unwanted and harmful material.
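The detection step can be illustrated with a minimal sketch. This is not Mollom's actual algorithm or API: the `fingerprint` and `spam_score` functions, the `KNOWN_SPAM` set, and the `SUSPICIOUS_TERMS` list are all hypothetical, and a simple keyword ratio stands in for the machine learning model.

```python
import hashlib
import re


def fingerprint(text: str) -> str:
    """Hash the text after normalizing case and whitespace."""
    normalized = re.sub(r"\s+", " ", text.strip().lower())
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


# Hypothetical stand-in for a database of known spam submissions.
KNOWN_SPAM = {fingerprint("Buy cheap pills now!!!")}

# Hypothetical keyword list standing in for a trained classifier.
SUSPICIOUS_TERMS = {"cheap", "pills", "casino", "winner"}


def spam_score(text: str) -> float:
    """Return a score between 0.0 (looks legitimate) and 1.0 (known spam)."""
    # Step 1: exact match against known spam, ignoring case and spacing.
    if fingerprint(text) in KNOWN_SPAM:
        return 1.0
    # Step 2: fall back to the share of words that are suspicious terms.
    words = re.findall(r"[a-z']+", text.lower())
    if not words:
        return 0.0
    hits = sum(1 for word in words if word in SUSPICIOUS_TERMS)
    return hits / len(words)
```

A site could then compare the score against a threshold of its own choosing. A real service would replace the keyword ratio with a trained model and a much larger spam corpus.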

If Mollom determines submitted content is legitimate, it is published automatically. If it detects spam, profanity, or policy-violating text, the content can be automatically rejected, flagged for review by a moderator, or replaced by placeholder text. Website owners can customize these rules and filters as needed. Mollom also provides spam and moderation analytics dashboards to monitor trends over time.
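The handling rules described above can be sketched as a small routing function. The names here (`Action`, `moderate`, `PLACEHOLDER`) are hypothetical and do not come from Mollom; the sketch only assumes that some earlier step has already decided whether the submission is unwanted.

```python
from enum import Enum
from typing import Optional, Tuple


class Action(Enum):
    PUBLISH = "publish"
    REJECT = "reject"
    FLAG_FOR_REVIEW = "flag_for_review"
    REPLACE = "replace"


# Hypothetical placeholder shown in place of removed content.
PLACEHOLDER = "[content removed by moderation]"


def moderate(
    text: str,
    is_unwanted: bool,
    policy: Action = Action.REJECT,
) -> Tuple[Action, Optional[str]]:
    """Return the action taken and the text to display (None if nothing is shown)."""
    if not is_unwanted:
        # Legitimate content is published automatically.
        return Action.PUBLISH, text
    if policy is Action.REJECT:
        return Action.REJECT, None
    if policy is Action.FLAG_FOR_REVIEW:
        # Held back until a moderator decides.
        return Action.FLAG_FOR_REVIEW, None
    if policy is Action.REPLACE:
        return Action.REPLACE, PLACEHOLDER
    raise ValueError("policy for unwanted content cannot be PUBLISH")
```

For example, `moderate("Nice post!", False)` publishes the text unchanged, while `moderate("spam text", True, Action.REPLACE)` returns the placeholder instead. Keeping the policy as a parameter mirrors the idea that site owners choose how unwanted content is handled.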

Overall, Mollom reduces the manual work required to moderate user-generated content. By automatically detecting and blocking spam and offensive submissions, it lets legitimate content be published faster while keeping unwanted content off the site. This improves the user experience by keeping malicious links, obscene comments, and other undesirable content away from visitors.

Mollom Features

- Real-time content analysis

- Spam and offensive content filtering

- Customizable content moderation

- Blacklists and whitelists

- Integrations with major CMS platforms

- Scalable for high-traffic websites

- CAPTCHA alternative

Pricing

- Freemium

- Subscription-Based

The Best Mollom Alternatives

Top AI Tools & Services, Content Moderation, and other apps similar to Mollom

Here are some alternatives to Mollom:

ReCAPTCHA

VisualCaptcha

Mosparo

CleanTalk

HCaptcha

Negative Captcha

GeeTest CAPTCHA

FunCaptcha

UniqPin

Confident Captcha

FeedCaptcha

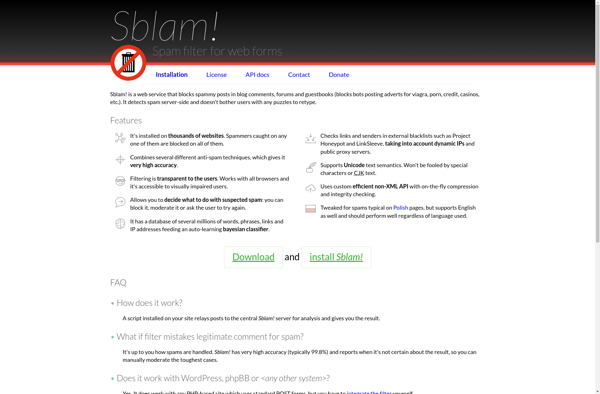

Sblam!

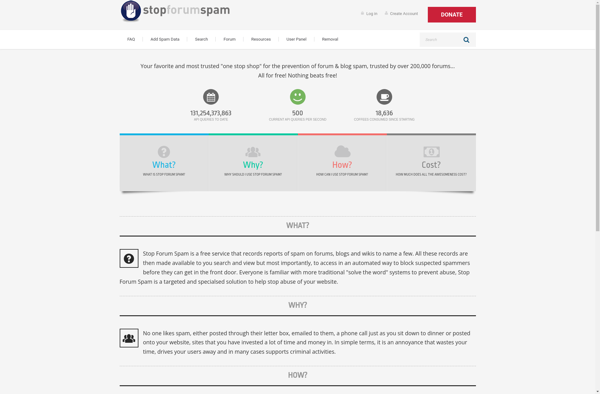

StopForumSpam

Anti-spam

CF7 Honeypot

Keypic

Antispam Bee