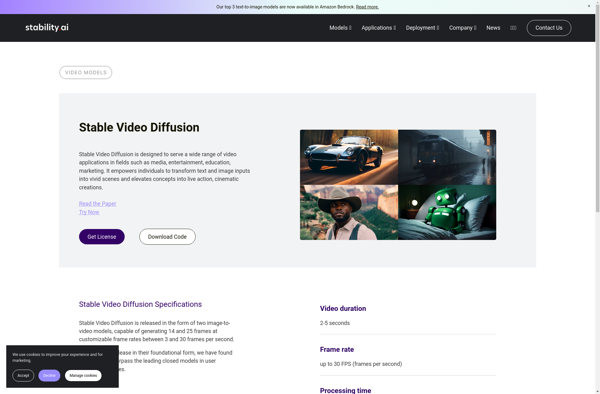

Stable Video Diffusion

Stable Video Diffusion: AI-Powered Video Generation

Discover how Stable Video Diffusion uses AI to turn a still image into a short, smooth, high-quality video clip, building on Stability AI's Stable Diffusion image models.

What is Stable Video Diffusion?

Stable Video Diffusion is a generative AI model developed by Stability AI that creates short video clips. It is built on Stability AI's Stable Diffusion image model and extends latent diffusion from single images to sequences of frames, producing smooth, coherent footage instead of a single still image.

The publicly released models work image-to-video: the user supplies a single conditioning image. That image can be a photo, or a picture generated from a text prompt such as "A panda bear waving in a forest" with a text-to-image model. Stable Video Diffusion then generates the frames that follow, predicting how the scene would move and evolve, and returns a short clip that stays consistent with the input image.

Some key capabilities of Stable Video Diffusion include:

- Generating clips of 14 frames (SVD) or 25 frames (SVD-XT) at 576×1024 resolution, played back at frame rates between 3 and 30 frames per second

- Producing videos that are temporally smooth and consistent, without abrupt jumps between frames

- Supporting a wide range of artistic styles for the generated videos

- Allowing control over attributes like camera movement and framing
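Clip length follows directly from frame count and playback rate: duration in seconds is the number of frames divided by frames per second. The sketch below assumes the figures from Stability AI's release announcement (14 frames for SVD, 25 frames for SVD-XT, playback between 3 and 30 fps); the `clip_seconds` helper and the sample rates are hypothetical and illustrative, not part of any Stability AI API.

```python
# Minimal sketch: playback duration = frame count / frames per second.
# Frame counts are assumed from Stability AI's release notes (SVD: 14, SVD-XT: 25).
MODEL_FRAMES = {"SVD": 14, "SVD-XT": 25}


def clip_seconds(num_frames: int, fps: float) -> float:
    """Hypothetical helper: duration in seconds of num_frames played at fps."""
    if num_frames <= 0 or fps <= 0:
        raise ValueError("num_frames and fps must be positive")
    return num_frames / fps


if __name__ == "__main__":
    # Sample rates chosen from the 3-30 fps range for illustration only.
    for name, frames in MODEL_FRAMES.items():
        for fps in (3, 7, 30):
            print(f"{name}: {frames} frames at {fps} fps -> {clip_seconds(frames, fps):.2f} s")
```

At the slow end of the range a 25-frame clip lasts a little over 8 seconds, while at 30 fps it is under a second, which is why clips from these models are short.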

Early results are promising, but the model still has limitations, such as short clip length and difficulty rendering faces and people realistically. As the technology advances, Stable Video Diffusion aims to make visually striking, physically plausible video generation available to a wide audience.

Stable Video Diffusion Features


- Image-to-video generation (combine with a text-to-image model to start from a text prompt)

- Control over video length, resolution and frame rate

- Ability to guide video generation with conditioning settings such as frame rate and amount of motion

- Generate many variations from a single input image by changing the random seed

- Seamless and coherent video generation

- High video quality and stability

- Fast generation

Pricing

- Free

- Open Source

The Best Stable Video Diffusion Alternatives


Here are some alternatives to Stable Video Diffusion:

- Runway ML

- Pika Labs

- Adobe Firefly

- Kaiber

- D-ID Creative Reality

- Vidnoz AI

- Wonder Studio

- Synthesia.io

- LensGo

- W.A.L.T Video Diffusion

- AI Studios

- Creatus

- Reemix.co

- PixVerse