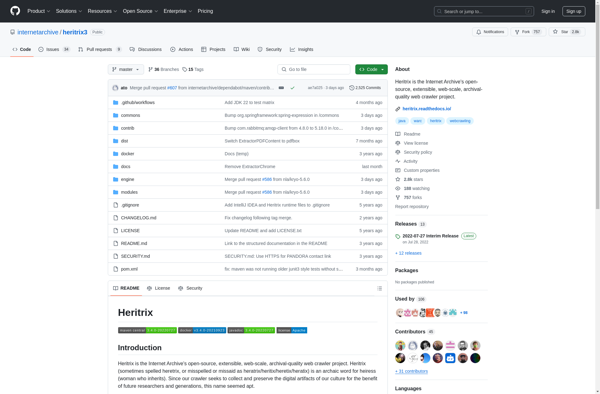

Description: Heritrix is an open-source, extensible, web-scale, archival-quality web crawler project built on the Apache stack. It is designed for archiving periodic captures of content from the web and large intranets.

Type: Open Source Test Automation Framework

Founded: 2011

Primary Use: Mobile app testing automation

Supported Platforms: iOS, Android, Windows

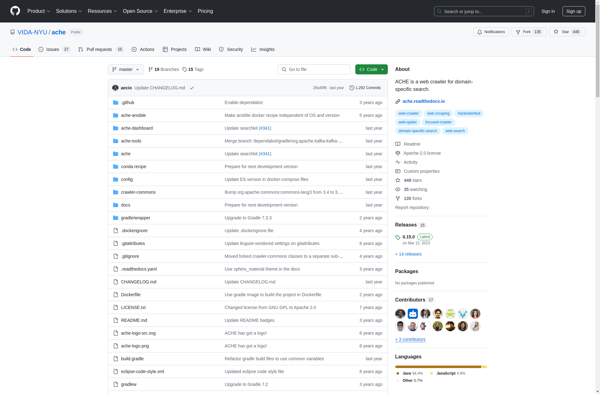

Description: ACHE Crawler is an open-source web crawler written in Java. It is designed to efficiently crawl large websites and collect structured data from them.

Type: Cloud-based Test Automation Platform

Founded: 2015

Primary Use: Web, mobile, and API testing

Supported Platforms: Web, iOS, Android, API