ACHE Crawler

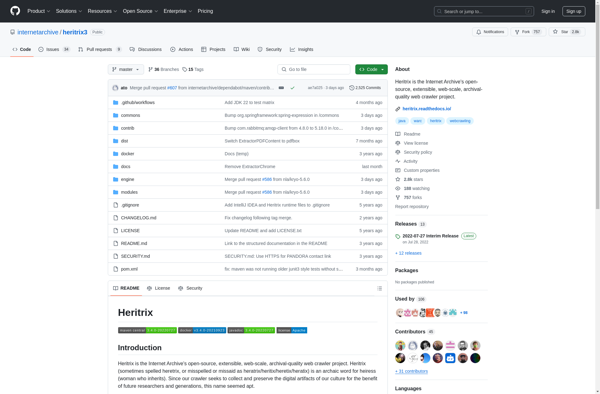

ACHE Crawler is an open-source web crawler written in Java. It is designed to efficiently crawl large websites and collect structured data from them.

ACHE Crawler: Open-Source Web Crawler for Large-Scale Crawling

What is ACHE Crawler?

ACHE Crawler is an open-source web crawler written in Java. It provides a framework for building customized crawlers to systematically browse websites and collect useful information from them.
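
To make "collecting useful information" concrete, here is a minimal sketch that turns one crawled HTML page into a structured record holding its title and absolute outgoing links. It is written in Python for brevity and is not ACHE's Java API. It uses only the standard-library `html.parser` module, and the names `PageExtractor` and `extract` are hypothetical.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class PageExtractor(HTMLParser):
    """Collects the <title> text and absolute outgoing links of one HTML page."""

    def __init__(self, base_url: str) -> None:
        super().__init__()
        self.base_url = base_url
        self.title = ""
        self.links: list[str] = []
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        if tag == "title":
            self._in_title = True
        elif tag == "a":
            href = dict(attrs).get("href")
            if href:  # skip anchors without an href value
                # Resolve relative links against the page URL.
                self.links.append(urljoin(self.base_url, href))

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def extract(url: str, html: str) -> dict:
    """Turn raw HTML into a small structured record (hypothetical helper)."""
    parser = PageExtractor(url)
    parser.feed(html)
    parser.close()
    return {"url": url, "title": parser.title.strip(), "links": parser.links}


if __name__ == "__main__":
    sample = ('<html><head><title>Docs</title></head><body>'
              '<a href="/a">A</a><a href="https://other.org/">B</a></body></html>')
    print(extract("https://example.com/index.html", sample))
```

A real crawler would store records like this in files, a database, or a search index rather than printing them.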

Some key features of ACHE Crawler include:

- Scalable architecture based on distributed computing to crawl large sites quickly

- Flexible plugin system to add customized data extraction and processing logic

- Built-in parsers and tools for common data types like JSON, HTML, text files, etc.

- Support for breadth-first and depth-first crawling strategies

- Integrations with databases, search indexes and data pipelines

- Scheduling and restart capabilities to resume crawls

- Deduplication of crawled pages

- Configurable politeness settings to avoid overloading sites, plus safeguards against crawler traps
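
Breadth-first and depth-first crawling differ only in which end of the URL frontier the crawler takes the next URL from, and deduplication is usually done by checking a set of already-seen, normalized URLs before enqueueing. The sketch below illustrates both ideas in Python; it is not ACHE's implementation. The `graph` dict is a hypothetical stand-in for fetched pages, and `normalize` is an assumed, deliberately simple canonicalization (lowercase scheme and host, drop fragments).

```python
from collections import deque
from urllib.parse import urldefrag, urlsplit, urlunsplit


def normalize(url: str) -> str:
    """Canonicalize a URL so trivially different forms deduplicate together."""
    url, _fragment = urldefrag(url)  # drop "#section" fragments
    parts = urlsplit(url)
    path = parts.path or "/"
    return urlunsplit((parts.scheme.lower(), parts.netloc.lower(), path, parts.query, ""))


def crawl_order(graph: dict[str, list[str]], seed: str,
                strategy: str = "bfs", max_pages: int = 100) -> list[str]:
    """Return the order in which pages would be visited.

    graph maps a URL to the links found on it (a stand-in for real fetching).
    'bfs' takes from the front of the frontier (FIFO), 'dfs' from the back (LIFO).
    """
    start = normalize(seed)
    frontier = deque([start])
    seen = {start}
    visited = []
    while frontier and len(visited) < max_pages:
        url = frontier.popleft() if strategy == "bfs" else frontier.pop()
        visited.append(url)
        for link in graph.get(url, []):
            link = normalize(link)
            if link not in seen:  # deduplicate before enqueueing
                seen.add(link)
                frontier.append(link)
    return visited
```

Swapping `popleft()` for `pop()` is the whole difference: a FIFO queue yields breadth-first order, a LIFO stack yields depth-first order.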

ACHE Crawler can help build scrapers for various purposes like price monitoring, content aggregation, SEO analysis, research datasets and more. Its plugin architecture makes it convenient for Java developers to customize the crawler's functionality for specific use cases.
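
The plugin idea can be illustrated with a small registry in which each extractor is a function from a page to a dictionary of fields, and the crawler runs whichever extractors are enabled. This is a hypothetical Python sketch of the general pattern, not ACHE's Java extension API: `PLUGINS`, `plugin`, `process`, and both example extractors are made up for illustration, and their regex-based parsing is deliberately naive.

```python
import re
from typing import Callable

# Hypothetical plugin contract: (url, html) -> dict of extracted fields.
Extractor = Callable[[str, str], dict]
PLUGINS: dict[str, Extractor] = {}


def plugin(name: str):
    """Decorator that registers an extractor under a name."""
    def register(func: Extractor) -> Extractor:
        PLUGINS[name] = func
        return func
    return register


@plugin("price")
def extract_price(url: str, html: str) -> dict:
    # Naive example: the first "$123.45"-style amount on the page.
    match = re.search(r"\$(\d+(?:\.\d{2})?)", html)
    return {"price": float(match.group(1))} if match else {}


@plugin("word_count")
def count_words(url: str, html: str) -> dict:
    text = re.sub(r"<[^>]+>", " ", html)  # crude tag stripping
    return {"words": len(text.split())}


def process(url: str, html: str, enabled: list[str]) -> dict:
    """Run the enabled plugins on one page and merge their outputs."""
    record = {"url": url}
    for name in enabled:
        record.update(PLUGINS[name](url, html))
    return record
```

For a price-monitoring crawl, `process(url, html, ["price"])` would be called on each fetched page; adding a new use case means registering another function rather than changing the crawl loop.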

ACHE Crawler Features

- Open source web crawler written in Java

- Designed for efficiently crawling large websites

- Collects structured data from websites

- Multi-threaded architecture

- Plugin support for custom data extraction

- Configurable via a YAML file (ache.yml)

- Supports breadth-first and depth-first crawling

- Respects robots.txt directives
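
A robots.txt check of the kind listed above can be sketched with Python's standard `urllib.robotparser` module; ACHE performs this check in its own Java code, which is not shown here. The robots.txt text and the `ExampleCrawler` user-agent are hypothetical, and in a real crawler the file would be fetched from the site's `/robots.txt`. The `Crawl-delay` value, when present, is a natural input to politeness settings.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content for an example site.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""

USER_AGENT = "ExampleCrawler"  # hypothetical user-agent string


def load_policy(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text that the crawler has already downloaded."""
    policy = RobotFileParser()
    policy.parse(robots_txt.splitlines())
    return policy


def allowed(policy: RobotFileParser, url: str) -> bool:
    """True if the robots.txt rules permit USER_AGENT to fetch url."""
    return policy.can_fetch(USER_AGENT, url)


policy = load_policy(ROBOTS_TXT)
print(allowed(policy, "https://example.com/private/report.html"))  # False
print(allowed(policy, "https://example.com/docs/index.html"))      # True
print(policy.crawl_delay(USER_AGENT))                               # 5
```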

Pricing

- Open Source

Pros

Free and open source

High performance and scalability

Extensible via plugins

Easy to configure

Respectful of crawl targets (honors robots.txt and politeness settings)

Cons

Requires Java knowledge to customize

Limited documentation

Not ideal for focused crawling of specific data

No web UI for managing crawls

The Best ACHE Crawler Alternatives

Top Development and Web Crawling apps similar to ACHE Crawler

Here are some alternatives to ACHE Crawler:

Scrapy

Scrapy is a fast, powerful and extensible open source web crawling framework for extracting data from websites, written in Python. Some key features and uses of Scrapy include: scraping, extracting data such as titles, links and images from HTML/XML web pages. It can recursively follow links to scrape data from multiple...

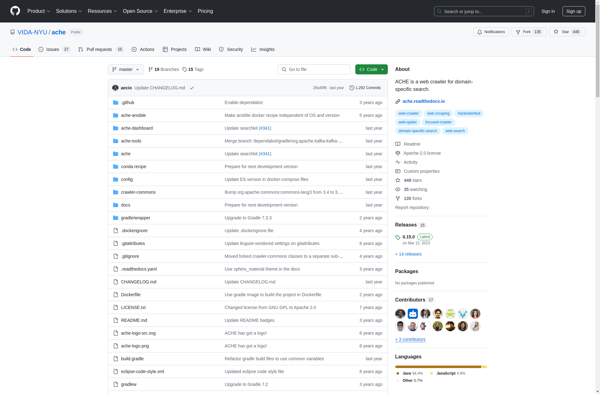

Crawlbase

Crawlbase is a powerful yet easy-to-use website crawler and web scraper. It allows you to efficiently crawl websites and extract targeted data or content into a structured format like CSV files or databases. Some key features of Crawlbase include: an intuitive visual interface for creating, managing and scheduling crawlers; support for crawl depths, politeness...

Apache Nutch

Apache Nutch is an open source web crawler software project written in Java. It provides a highly extensible, fully featured web crawler engine for building search indexes and archiving web content. Nutch can crawl websites by following links and indexing page content and metadata. It supports flexible customization and pluggable parsing,...

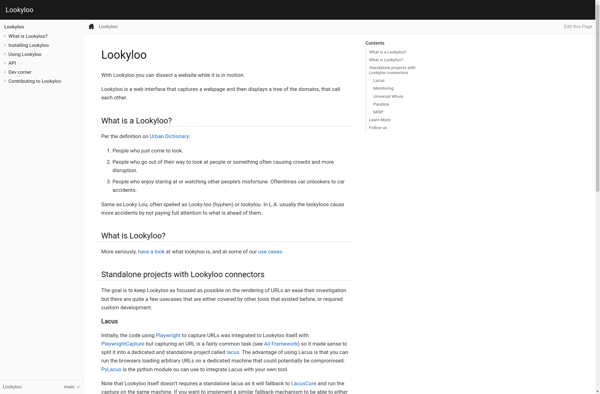

Lookyloo

Lookyloo is an open source web crawling and website analysis platform. It provides an extensible framework for developers and security researchers to build custom scrapers, analyzers, and visualizers to explore and monitor websites. Some key capabilities and features of Lookyloo include: flexible crawling with support for depth-first, breadth-first, and manual/custom crawling; plugin architecture...

Mixnode

Mixnode is a privacy-focused web browser developed by Mixnode Technologies Inc. Its main goal is to prevent user tracking and protect personal data when browsing the internet. Some key features of Mixnode include: blocking online ads and trackers by default to limit data collection; offering encrypted proxy connections to hide user IP addresses...

StormCrawler

StormCrawler is an open source distributed web crawler that is designed to crawl very large websites quickly by scaling horizontally. It is built on top of Apache Storm, a distributed real-time computation system, which allows StormCrawler to be highly scalable and fault-tolerant. Some key features of StormCrawler include: horizontal scaling - By...
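
Horizontal scaling in a distributed crawler is commonly achieved by partitioning URLs across workers by host, so that each host is handled by exactly one worker and per-host politeness can be enforced locally. The Python sketch below shows that general technique only; it is not StormCrawler's code, and `partition_for` is a hypothetical helper. It uses `hashlib` rather than the built-in `hash()`, because Python randomizes string hashes per process, which would send the same host to different workers on different machines.

```python
import hashlib
from urllib.parse import urlsplit


def partition_for(url: str, num_workers: int) -> int:
    """Return the index of the worker responsible for url's host.

    Every URL on the same host maps to the same worker, so that worker
    alone can enforce per-host politeness delays.
    """
    host = urlsplit(url).hostname or ""  # .hostname is already lowercased
    digest = hashlib.sha1(host.encode("utf-8")).digest()
    # Stable across processes and machines, unlike the built-in hash().
    return int.from_bytes(digest[:8], "big") % num_workers


if __name__ == "__main__":
    for u in ["https://a.com/x", "https://a.com/y", "https://b.org/"]:
        print(u, "->", partition_for(u, 4))
```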

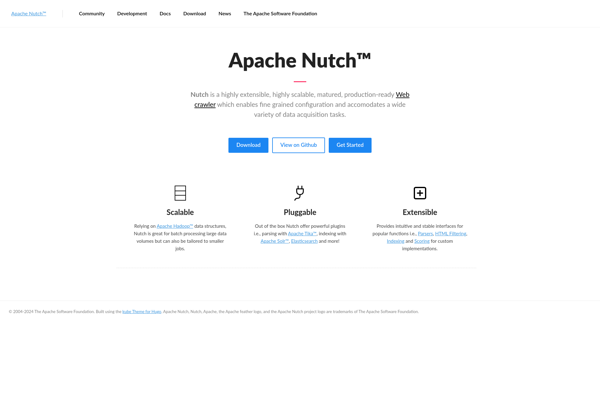

Heritrix

Heritrix is an open-source web crawler software project that was originally developed by the Internet Archive. It is designed to systematically browse and archive web pages by recursively following hyperlinks and storing the content in the WARC file format. Some key features of Heritrix include: extensible and modular architecture based on Apache...