StormCrawler

StormCrawler: Open Source Web Crawler

Crawl large websites at scale on Apache Storm, with fault tolerance and integration with other Storm components.

What is StormCrawler?

StormCrawler is an open source toolkit for building distributed web crawlers that scale horizontally, so that large crawls can be handled quickly by adding machines. It is built on top of Apache Storm, a distributed real-time computation system, from which it inherits its scalability and fault tolerance.

Some key features of StormCrawler include:

- Horizontal scaling - Because it runs on Apache Storm, StormCrawler scales to large crawls by adding worker nodes and increasing the parallelism of its components.

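- Fault tolerance - Storm's guaranteed message processing tracks each tuple until it is acknowledged and lets failed or timed-out tuples be replayed, so URLs in flight when a crawl instance goes down are retried rather than silently lost.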

- Extensibility - StormCrawler provides clear extension points and abstraction layers, allowing custom implementations of fetching, parsing, indexing, and other steps.

- Ease of configuration - Simple YAML configuration files define crawl settings such as scheduling and politeness, as well as output targets like Elasticsearch.

- Real-time processing - Crawl results can be processed as they are produced by adding other Storm components for tasks such as machine learning or NLP.
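The fault-tolerance point above rests on Storm's acknowledgement mechanism. The following is a minimal, self-contained Python model of that at-least-once idea, not Storm's or StormCrawler's API; the names `AckingSpout` and `crawl` are hypothetical. A spout tracks every emitted URL as pending, a successful downstream step acks it, and a failure puts it back on the queue to be emitted again.

```python
from collections import deque


class AckingSpout:
    """Toy model of Storm-style at-least-once delivery (hypothetical, not the Storm API).

    Every emitted URL stays in `pending` until it is acked; a failed URL is
    put back on the queue so that it will be emitted again.
    """

    def __init__(self, urls):
        self.queue = deque(urls)
        self.pending = {}  # message id -> URL awaiting acknowledgement
        self.next_id = 0

    def next_tuple(self):
        """Emit (msg_id, url) for the next queued URL, or None if the queue is empty."""
        if not self.queue:
            return None
        url = self.queue.popleft()
        msg_id = self.next_id
        self.next_id += 1
        self.pending[msg_id] = url
        return msg_id, url

    def ack(self, msg_id):
        """Downstream processing succeeded: stop tracking the URL."""
        del self.pending[msg_id]

    def fail(self, msg_id):
        """Downstream processing failed: requeue the URL for replay."""
        self.queue.append(self.pending.pop(msg_id))


def crawl(spout, fetch):
    """Drain the spout, acking successful fetches and failing the rest.

    Note: a URL that always fails is retried forever in this toy version.
    """
    done = []
    while (item := spout.next_tuple()) is not None:
        msg_id, url = item
        try:
            fetch(url)
        except Exception:
            spout.fail(msg_id)
        else:
            spout.ack(msg_id)
            done.append(url)
    return done
```

In Storm itself, a tuple tree that is not fully acked within `topology.message.timeout.secs` is also reported to the spout as failed, and the spout decides whether to re-emit it. Because delivery is at-least-once, a URL may be processed more than once, and a real crawler would cap retries instead of looping forever.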

StormCrawler is designed to crawl complex sites and sitemaps efficiently without overloading the servers it visits: it respects robots.txt and throttles requests to each host. Typical use cases include search engine indexing, building machine learning datasets, and web archiving. With horizontal scalability and fault tolerance, StormCrawler provides a robust platform for large-scale web crawling.
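The politeness behaviour described above comes down to two checks before each fetch: does the site's robots.txt allow the URL, and has enough time passed since the last request to that host? Below is a minimal Python sketch of both using the standard library's `urllib.robotparser`. The names `allowed_by_robots` and `HostThrottle` are hypothetical, and this is not StormCrawler's implementation; in StormCrawler the per-host delay is configured through settings such as `fetcher.server.delay`.

```python
import time
from urllib.parse import urlsplit
from urllib.robotparser import RobotFileParser


def allowed_by_robots(robots_txt, user_agent, url):
    """Return True if the given robots.txt content permits user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    parser.modified()  # mark the rules as loaded so can_fetch() does not treat them as unread
    return parser.can_fetch(user_agent, url)


class HostThrottle:
    """Enforce a minimum delay between successive fetches from the same host."""

    def __init__(self, delay_seconds, clock=time.monotonic):
        self.delay = delay_seconds
        self.clock = clock        # injectable so tests can use a fake clock
        self.next_allowed = {}    # host -> earliest time the next fetch may start

    def wait_time(self, url):
        """Seconds to wait before fetching url (0.0 if its host is ready now)."""
        host = urlsplit(url).hostname
        now = self.clock()
        return max(0.0, self.next_allowed.get(host, now) - now)

    def record_fetch(self, url):
        """Record a fetch from url's host, pushing its next slot delay_seconds ahead."""
        host = urlsplit(url).hostname
        self.next_allowed[host] = self.clock() + self.delay
```

A fetcher would call `allowed_by_robots` once per URL, then sleep for `wait_time(url)` (or move on to another host's URL) before fetching and calling `record_fetch(url)`. Keying the delay by host means that different sites never slow each other down.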

StormCrawler Features

- Distributed web crawling

- Fault tolerant

- Horizontally scalable

- Integrates with other Apache Storm components

- Configurable politeness policies

- Supports parsing and indexing

- APIs for feed injection
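Horizontal scaling and configurable politeness fit together because URLs are partitioned: every URL from the same host (or domain) is routed to the same fetcher instance, so that instance can enforce the host's delay locally without coordinating with other machines. StormCrawler exposes a comparable choice through its `partition.url.mode` setting (`byHost`, `byDomain` or `byIP`), and in a Storm topology such routing is typically done with a fields grouping on the partition key. The Python sketch below only illustrates the idea: `partition_key` and `assign_fetcher` are hypothetical names, and only `byHost` and a naive `byDomain` are implemented.

```python
import hashlib
from urllib.parse import urlsplit


def partition_key(url, mode="byHost"):
    """Return the grouping key for url under the given partitioning mode."""
    host = urlsplit(url).hostname or ""  # urlsplit already lowercases the hostname
    if mode == "byHost":
        return host
    if mode == "byDomain":
        # Naive approximation: keep the last two labels. A real crawler would
        # use the Public Suffix List so that e.g. "example.co.uk" stays intact.
        return ".".join(host.split(".")[-2:])
    raise ValueError(f"unsupported partitioning mode: {mode!r}")


def assign_fetcher(url, num_fetchers, mode="byHost"):
    """Map url to a fetcher index in [0, num_fetchers) by hashing its key.

    A stable digest is used instead of hash() because Python salts str
    hashes per process, and every node must agree on the assignment.
    """
    key = partition_key(url, mode)
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_fetchers
```

Partitioning by domain rather than host keeps all subdomains of a site on one fetcher, which is more polite to sites that serve many subdomains from the same servers, at the cost of less even load spreading.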

Pricing

- Open Source

The Best StormCrawler Alternatives

Top development, web crawling, and scraping apps similar to StormCrawler

Here are some alternatives to StormCrawler:

Scrapy

Crawlbase

Apache Nutch

Lookyloo

Mixnode

Heritrix

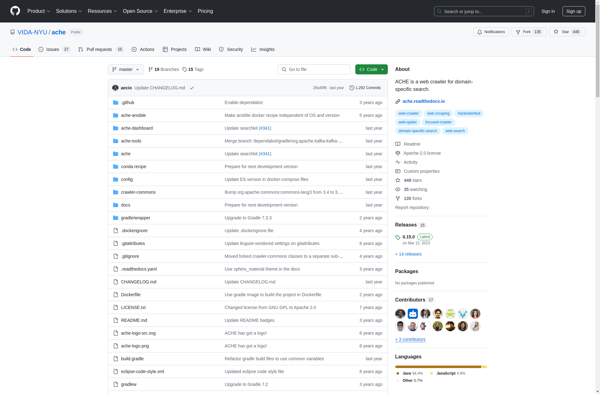

ACHE Crawler