Amazon Kinesis

Amazon Kinesis: Real-Time Data Ingestion & Processing Service

A managed service for ingesting and processing streaming data in real time from diverse sources and routing it to multiple endpoints.

What is Amazon Kinesis?

Amazon Kinesis is a managed cloud service from Amazon Web Services (AWS) for ingesting and processing streaming data in real time. It is designed to handle high volumes of streaming data from many sources simultaneously, making it well suited to real-time analytics and big data workloads.

Some key capabilities and benefits of Amazon Kinesis include:

- Scalable data streams that can ingest gigabytes of data per second from hundreds of thousands of sources

- Real-time processing of streaming data as soon as it arrives to enable near-instant analytics and insights

- Customizable data processing through Kinesis Data Analytics (since renamed Amazon Managed Service for Apache Flink) and other AWS analytics services

- Easy integration with a variety of data sources like web/mobile apps, IoT devices, and more through Kinesis agents and producers

- Durable storage of streaming data for later replay and reprocessing needs

- High availability and durability built in to handle data streams 24/7

Amazon Kinesis integrates closely with other AWS services like S3, Redshift, and Lambda to provide a complete platform for streaming data intake, processing, analysis, and storage. The service handles the underlying infrastructure to simplify real-time analytics at any scale.
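As a rough illustration of the producer side described above, the following Python sketch builds a batch of records and sends it to a Kinesis data stream with the boto3 `put_records` call. The stream name `example-stream`, the region, the `device_id` field used as partition key, and the helper names are all assumptions made for this example; JSON encoding is likewise just one possible choice.

```python
import json


def build_put_records_entries(events, key_field="device_id"):
    """Convert dict events into the entry format expected by PutRecords.

    `key_field` is a hypothetical field chosen for this sketch.
    """
    entries = []
    for event in events:
        entries.append({
            # Data must be bytes; JSON encoding is an assumption of this sketch.
            "Data": json.dumps(event).encode("utf-8"),
            # The partition key determines which shard receives the record.
            "PartitionKey": str(event[key_field]),
        })
    return entries


def send_events(events, stream_name="example-stream", region="us-east-1"):
    """Send events in a single PutRecords call (up to 500 records per call)."""
    import boto3  # imported lazily so the helper above runs without the AWS SDK

    client = boto3.client("kinesis", region_name=region)
    response = client.put_records(
        StreamName=stream_name,
        Records=build_put_records_entries(events),
    )
    # PutRecords can partially fail; callers should retry the failed entries.
    return response["FailedRecordCount"]
```

Calling `send_events([{"device_id": 7, "temp": 21.5}])` requires AWS credentials and an existing stream. A non-zero return value means some records were rejected and should be resent.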

Amazon Kinesis Features

Features

- Real-time data streaming

- Scalable data ingestion

- Data processing through Kinesis Data Analytics (since renamed Amazon Managed Service for Apache Flink)

- Integration with other AWS services

- Serverless management

- Data replay capability

Pricing

- Pay-As-You-Go

Pros

Cons

The Best Amazon Kinesis Alternatives

Top AI Tools & Services, Data Streaming, and other similar apps like Amazon Kinesis

Here are some alternatives to Amazon Kinesis:

Talend

Databricks

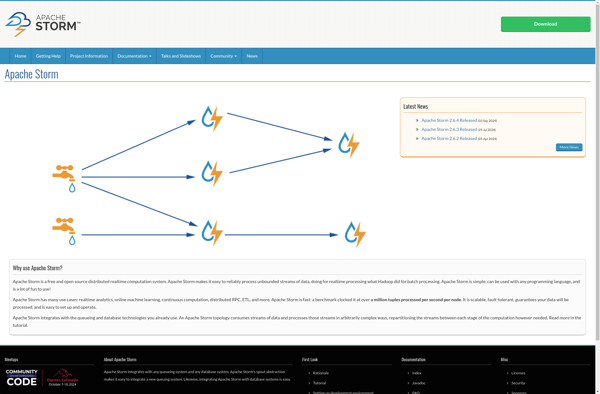

Apache Storm

StreamSets

Apache Hadoop

Apache Spark

Disco MapReduce

Apache Beam