Apache Hadoop

Apache Hadoop: Open Source Big Data Framework

Apache Hadoop is an open-source framework for storing and processing big data in a distributed computing environment. It provides large-scale storage and high-bandwidth data processing across clusters of computers.

What is Apache Hadoop?

Apache Hadoop is an open-source software framework for distributed storage and distributed processing of very large data sets on computer clusters. Hadoop is developed as a project of the Apache Software Foundation and is written primarily in Java.

Some key capabilities and features of Hadoop include:

- Massive scale - Hadoop enables distributed processing of massive amounts of data across clusters made up of commodity hardware.

- Fault tolerance - Data and application processing are redundantly distributed across the cluster, so the failure of an individual computer does not result in data loss or interrupt application processing.

- Flexibility - New nodes can be added as needed and Hadoop will automatically distribute data and processing across the new nodes.

- Low cost - Hadoop runs on commodity hardware, reducing capital expenditure and operational costs compared to traditional data warehousing solutions.

- Variety of data sources - Hadoop allows ingestion of structured and unstructured data from a wide variety of sources.
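
The fault tolerance point above can be illustrated with a small sketch. It assumes each block of data is kept as three copies on different nodes, and it places replicas round-robin for simplicity; real Hadoop clusters use a more involved, rack-aware placement policy. The names `place_blocks`, `surviving_blocks`, and the node and block labels are hypothetical and exist only for this illustration.

```python
def place_blocks(block_ids, nodes, replication=3):
    """Assign each block to `replication` distinct nodes, round-robin.

    Simplified stand-in for a real placement policy (assumption).
    """
    if replication > len(nodes):
        raise ValueError("replication factor exceeds node count")
    placement = {}
    for i, block in enumerate(block_ids):
        # Consecutive offsets modulo the node count give distinct nodes.
        placement[block] = [nodes[(i + r) % len(nodes)] for r in range(replication)]
    return placement


def surviving_blocks(placement, failed_nodes):
    """Return the blocks that still have at least one replica on a live node."""
    failed = set(failed_nodes)
    return {
        block
        for block, replicas in placement.items()
        if any(node not in failed for node in replicas)
    }


nodes = ["node-a", "node-b", "node-c", "node-d"]
blocks = ["blk-0", "blk-1", "blk-2", "blk-3"]
placement = place_blocks(blocks, nodes, replication=3)

# Losing a single node leaves every block readable from another replica.
print(sorted(surviving_blocks(placement, ["node-b"])))
```

With three replicas per block, any two nodes in this toy cluster can fail without losing data; a block is lost only when all of the nodes holding its replicas fail together.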

Some common use cases of Hadoop include log file analysis, social media analytics, financial analytics, media analytics, risk modeling, recommendation systems, and fraud detection.
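
To make the log file analysis use case more concrete, here is a minimal single-machine sketch of the map, shuffle, and reduce pattern that Hadoop's MapReduce model applies across a cluster. It does not use any Hadoop API. The log format (`METHOD PATH STATUS`) and the function names are assumptions made for this example.

```python
from collections import defaultdict


def map_phase(line):
    """Emit (status_code, 1) for one log line in the assumed 'METHOD PATH STATUS' format."""
    parts = line.split()
    if len(parts) == 3:  # skip malformed lines
        yield parts[2], 1


def shuffle(pairs):
    """Group emitted values by key, as the framework would between map and reduce."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(key, values):
    """Sum the counts collected for one status code."""
    return key, sum(values)


def count_statuses(lines):
    pairs = (pair for line in lines for pair in map_phase(line))
    return dict(reduce_phase(key, values) for key, values in shuffle(pairs).items())


logs = ["GET /index 200", "GET /missing 404", "POST /login 200", "malformed"]
print(count_statuses(logs))  # {'200': 2, '404': 1}
```

On a cluster, the map step would run in parallel on the nodes that hold each piece of the log data, and the reduce step would combine the partial counts.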

Overall, Apache Hadoop enables cost-effective and scalable data processing for big data applications across a cluster of computers.

Apache Hadoop Features

Features

- Distributed storage and processing of large datasets

- Fault tolerance

- Scalability

- Flexibility

- Cost effectiveness

Pricing

- Open Source


The Best Apache Hadoop Alternatives

Top AI Tools & Services, Big Data, and other similar apps like Apache Hadoop

Here are some alternatives to Apache Hadoop:

Amazon Kinesis

Apache Flink

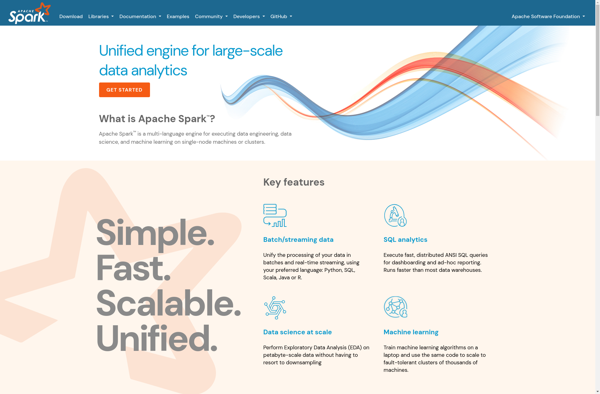

Apache Spark

Dispy

Disco MapReduce

Upsolver