Disco MapReduce

Disco MapReduce: Open-Source Distributed Computing Framework

An open-source MapReduce framework for distributed computing of large data sets on clusters of commodity hardware, featuring fault tolerance, automatic parallelization, and job monitoring.

What is Disco MapReduce?

Disco is an open-source MapReduce framework, originally developed by Nokia, for distributing computation over extremely large data sets across clusters of commodity hardware. It is designed to be scalable, fault-tolerant, and easy to use.
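
To make the MapReduce model concrete, here is a single-process Python sketch of the phases that a framework like Disco spreads across a cluster: map, partition (shuffle), and reduce. It does not use Disco's API. The function names and the hash-based partitioning scheme are illustrative assumptions.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, List, Tuple

Pair = Tuple[str, int]


def map_phase(lines: Iterable[str]) -> Iterator[Pair]:
    """Map: emit a (word, 1) pair for every word in every input line."""
    for line in lines:
        for word in line.split():
            yield word.lower(), 1


def partition(pairs: Iterable[Pair], num_partitions: int) -> List[List[Pair]]:
    """Shuffle: send all pairs with the same key to the same partition (hash-based, an assumption)."""
    buckets: List[List[Pair]] = [[] for _ in range(num_partitions)]
    for key, value in pairs:
        buckets[hash(key) % num_partitions].append((key, value))
    return buckets


def reduce_phase(bucket: Iterable[Pair]) -> Dict[str, int]:
    """Reduce: sum the values for each key within one partition."""
    totals: Dict[str, int] = defaultdict(int)
    for key, value in bucket:
        totals[key] += value
    return dict(totals)


def word_count(lines: Iterable[str], num_partitions: int = 2) -> Dict[str, int]:
    """Run all phases in one process; a cluster would reduce each partition on a separate worker."""
    result: Dict[str, int] = {}
    for bucket in partition(map_phase(lines), num_partitions):
        # Partitions hold disjoint key sets, so merging their outputs never overwrites a count.
        result.update(reduce_phase(bucket))
    return result


if __name__ == "__main__":
    print(word_count(["The quick brown fox", "the lazy dog"]))
```

The partitioner guarantees that every pair for a given key lands in the same bucket. Because of this, each reduce can run independently of the others, and that independence is what allows a framework to distribute the reduce phase across nodes.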

Some key features of Disco MapReduce include:

- Automatic parallelization and distribution of MapReduce jobs

- Fault tolerance, with automatic retry of failed tasks

- Support for storage systems such as HDFS and Amazon S3

- Web-based job monitoring and control interface

- Lightweight Python programming interface

- Batch-oriented and stream-oriented MapReduce interfaces
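
The automatic parallelization and fault-tolerance items above can be illustrated with a small local model. In this model, tasks run in parallel on a thread pool, and any task that raises an exception is resubmitted, up to a fixed number of attempts. This is a simplified sketch and not Disco's scheduler. `run_with_retries`, `max_attempts`, and the simulated `flaky_square` task are all hypothetical. A real cluster would also typically reschedule a failed task on a different node rather than in the same local pool.

```python
import threading
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List, TypeVar

T = TypeVar("T")
R = TypeVar("R")


def run_with_retries(task: Callable[[T], R], inputs: List[T],
                     max_attempts: int = 3, workers: int = 4) -> List[R]:
    """Run task(x) for every input in parallel, resubmitting failed tasks up to max_attempts times."""
    results: Dict[int, R] = {}
    pending = list(range(len(inputs)))  # indices of tasks that still need a successful run
    for _ in range(max_attempts):
        failed: List[int] = []
        with ThreadPoolExecutor(max_workers=workers) as pool:
            futures = {i: pool.submit(task, inputs[i]) for i in pending}
            for i, future in futures.items():
                try:
                    results[i] = future.result()
                except Exception:
                    failed.append(i)  # retry this task on the next attempt
        if not failed:
            break
        pending = failed
    else:
        raise RuntimeError(f"tasks {pending} still failing after {max_attempts} attempts")
    return [results[i] for i in range(len(inputs))]


_lock = threading.Lock()
_attempts: Dict[int, int] = {}


def flaky_square(x: int) -> int:
    """Hypothetical task: odd inputs fail on their first attempt to simulate a transient worker failure."""
    with _lock:
        _attempts[x] = _attempts.get(x, 0) + 1
        first_try = _attempts[x] == 1
    if x % 2 == 1 and first_try:
        raise OSError("simulated worker failure")
    return x * x


if __name__ == "__main__":
    print(run_with_retries(flaky_square, [1, 2, 3, 4]))  # [1, 4, 9, 16]
```

Only the failed tasks are resubmitted. Results that already succeeded are kept, so one transient failure does not force the whole job to restart.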

Disco can handle very large data sets, on the order of petabytes, and scale to thousands of nodes. Nokia has used it for data-intensive processing such as clickstream analysis, data mining, and machine learning.

Overall, Disco MapReduce provides a good open-source alternative to commercial managed services such as Amazon EMR, with the added flexibility of running Disco on private cloud infrastructure.

Disco MapReduce Features

- MapReduce framework for distributed data processing

- Built-in fault tolerance

- Automatic parallelization

- Job monitoring and management

- Optimized for commodity hardware clusters

- Python API for MapReduce job creation
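
Because Disco exposes a Python API, a job is defined as ordinary Python functions. The sketch below assumes that Disco's map and reduce functions take an `(input, params)` argument shape, following its word-count tutorial. It applies that shape to the clickstream use case mentioned earlier by counting page views per URL. The `user_id url` log format, the names `map_hits` and `reduce_hits`, and the `run_local` driver are all hypothetical. On a real cluster, the functions would be submitted through Disco's job API instead of `run_local`.

```python
from itertools import groupby
from operator import itemgetter
from typing import Any, Callable, Iterable, Iterator, List, Tuple

Pair = Tuple[str, int]


def map_hits(line: str, params: Any) -> Iterator[Pair]:
    """Map: emit (url, 1) for a log line of the hypothetical form 'user_id url'."""
    parts = line.split()
    if len(parts) == 2:  # skip malformed lines instead of failing the task
        yield parts[1], 1


def reduce_hits(pairs: Iterable[Pair], params: Any) -> Iterator[Pair]:
    """Reduce: sum the hits per URL; groupby requires the pairs to be sorted by key first."""
    for url, group in groupby(sorted(pairs), key=itemgetter(0)):
        yield url, sum(count for _, count in group)


def run_local(lines: Iterable[str],
              map_fn: Callable[[str, Any], Iterable[Pair]],
              reduce_fn: Callable[[Iterable[Pair], Any], Iterable[Pair]],
              params: Any = None) -> List[Pair]:
    """Hypothetical single-process driver standing in for job submission to a Disco cluster."""
    intermediate = [pair for line in lines for pair in map_fn(line, params)]
    return list(reduce_fn(intermediate, params))


if __name__ == "__main__":
    logs = ["u1 /home", "u2 /about", "u1 /home", "malformed"]
    print(run_local(logs, map_hits, reduce_hits))  # [('/about', 1), ('/home', 2)]
```

Writing map and reduce as generators means each function yields pairs lazily, which keeps memory use low for large inputs.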

Pricing

- Open Source

The Best Disco MapReduce Alternatives

Here are some alternatives to Disco MapReduce:

Amazon Kinesis

Apache Hadoop

Apache Flink

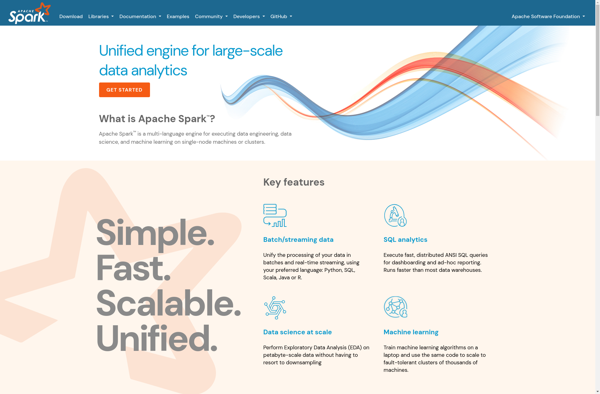

Apache Spark

Dispy