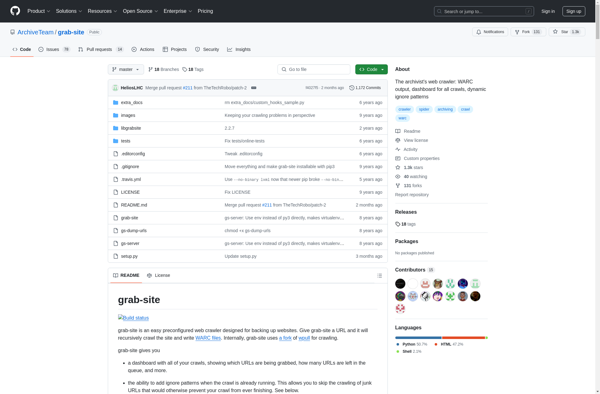

Grab-site

Grab-site: Website Copier and Downloader Tool

Grab-site is a website copier and downloader tool that allows you to easily copy entire websites locally for offline browsing or archiving purposes. It can download a site's HTML, images, CSS, JS, and other assets.

What is Grab-site?

Grab-site copies entire websites, including all HTML pages, images, JavaScript files, CSS stylesheets, and other assets, onto your local computer for offline browsing and archiving.

Some key features of Grab-site include:

- Preserves all links and website structure for seamless offline access

- Downloads a site far faster than saving each page manually

- Has configurable depth and URL filters for fine-grained downloading control

- Supports resuming broken downloads and batch downloading

- Lets you set rate limits and restrictions to avoid overloading sites

- Offers multi-threading and caching for maximum speed

Grab-site is perfect for web developers who want an easy way to test sites locally, digital archivists and researchers who need reliable backups of websites, and anyone who needs offline access to sites for periods of limited or no internet connectivity. With its intuitive interface and advanced options, Grab-site makes copying entire websites for offline use effortless.

Grab-site Features

- Download entire websites for offline browsing

- Save websites as local HTML files

- Download images, CSS, JavaScript, and other assets

- Supports recursive downloading of linked pages

- Customizable download options (e.g., depth, file types)

- Ability to exclude certain files or directories from the download

- Multithreaded downloading for faster site mirroring

- Supports various URL schemes (HTTP, HTTPS, FTP)
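To make the depth and exclusion options above more concrete, here is a minimal Python sketch of how a depth-limited, filtered recursive crawl can decide which URLs to visit. It is an illustration of the general technique only, not Grab-site's actual implementation or command-line interface. The function name `plan_crawl`, its parameters, and the in-memory `SITE` link graph (which stands in for real page fetching) are all hypothetical.

```python
import re
from collections import deque
from urllib.parse import urlparse


def plan_crawl(start_url, link_graph, max_depth=2, exclude_patterns=()):
    """Return the URLs a depth-limited, filtered crawl would visit, in BFS order.

    link_graph is a hypothetical stand-in for real fetching: a dict that maps
    each URL to the list of URLs its page links to.
    """
    excluded = [re.compile(p) for p in exclude_patterns]
    start_host = urlparse(start_url).netloc
    seen = {start_url}
    queue = deque([(start_url, 0)])
    visited = []

    while queue:
        url, depth = queue.popleft()
        visited.append(url)
        if depth >= max_depth:
            continue  # do not follow links beyond the depth limit
        for link in link_graph.get(url, []):
            if link in seen:
                continue  # already queued or visited
            if urlparse(link).netloc != start_host:
                continue  # stay on the starting host
            if any(p.search(link) for p in excluded):
                continue  # skip URLs that match an exclude pattern
            seen.add(link)
            queue.append((link, depth + 1))
    return visited


# Hypothetical link graph used in place of a live website.
SITE = {
    "https://example.com/": [
        "https://example.com/a",
        "https://example.com/private/x",
        "https://other.example/",
    ],
    "https://example.com/a": ["https://example.com/b", "https://example.com/"],
    "https://example.com/b": ["https://example.com/c"],
}

if __name__ == "__main__":
    for url in plan_crawl("https://example.com/", SITE, max_depth=2,
                          exclude_patterns=[r"/private/"]):
        print(url)
```

With `max_depth=2` and the `/private/` pattern excluded, the sketch visits the start page, `/a`, and `/b`. It skips the off-site link and the excluded directory, and never reaches `/c` because that page sits three links away from the start.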

Pricing

- Open Source

The Best Grab-site Alternatives

Top web browsers, website copiers, and other apps similar to Grab-site

Here are some alternatives to Grab-site:

Wget

HTTrack

SiteSucker

WebCopy

WebSiteSniffer

WebCopier

ScrapBook X

WebScrapBook

Offline Pages Pro

SurfOffline

Mixnode

SitePuller

Fossilo

PageFreezer

Web Dumper

WebsiteToZip

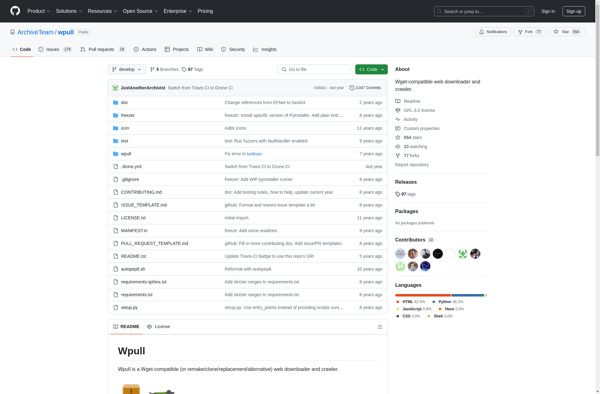

Wpull