HTTrack

HTTrack: Open Source Website Copier and Offline Browser

Download a website to a local directory, recursively rebuilding its directories and fetching HTML, images, and other files from the server to your computer.

What is HTTrack?

HTTrack is an open source offline browser utility, which allows you to download a website from the Internet to a local directory. It recursively retrieves all the necessary files from the server to your computer, including HTML, images, and other media files, in order to browse the website offline without any internet connection.

It's useful for archiving websites or consulting them later without going online. The software crawls (spiders) the links on the target domain you specify and downloads the content locally, rebuilding the site's directory structure so you can browse it offline. This means you don't depend on your internet connection or on the original servers being available, and you can keep a copy of an entire website for personal use or records.
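The crawl-and-rebuild idea described above can be sketched in a few lines of Python. This is only an illustration of the general technique, not HTTrack's actual code or on-disk layout: the names `LinkCollector`, `same_site_links`, and `local_path`, the `mirror` root folder, and the `example.com` URLs are all assumptions made for the example, and no network access is performed.

```python
from html.parser import HTMLParser
from pathlib import PurePosixPath
from urllib.parse import urljoin, urlparse


class LinkCollector(HTMLParser):
    """Collect href/src attribute values from an HTML page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        for name, value in attrs:
            if name in ("href", "src") and value:
                self.links.append(value)


def same_site_links(page_url, html):
    """Return absolute URLs found in html that share page_url's host."""
    collector = LinkCollector()
    collector.feed(html)
    host = urlparse(page_url).netloc
    found = []
    for link in collector.links:
        # Resolve relative links and drop any #fragment part.
        absolute = urljoin(page_url, link).split("#", 1)[0]
        if urlparse(absolute).netloc == host and absolute not in found:
            found.append(absolute)
    return found


def local_path(url, root="mirror"):
    """Map a URL to a file path under root, mirroring the site's directories.

    The root/host/path layout is an assumption for illustration only.
    """
    parsed = urlparse(url)
    path = parsed.path
    if path == "" or path.endswith("/"):
        path += "index.html"  # directory-style URLs get an index file
    return str(PurePosixPath(root, parsed.netloc, path.lstrip("/")))
```

A real mirroring tool would repeat these two steps for every page it downloads: collect the same-site links, save each file at its mapped path, and queue the newly found pages.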

Key features of HTTrack include easy downloading and mirroring of websites, the ability to update existing mirrors to refresh their content, filters that control which file types are retrieved, proxy support for privacy/anonymity, and a customizable crawl depth. It is available on Windows, Linux, and macOS. Overall, it's one of the most mature and full-featured offline browsers for replicating websites locally.
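To make the crawl-depth and file-type options more concrete, here is a minimal Python sketch of how a depth limit and a file-type filter can shape what gets downloaded. It is not HTTrack's implementation: the function `plan_crawl`, the in-memory `link_graph` that stands in for the network, and the exact meaning of "depth" (number of link hops from the start page) are all assumptions for this example.

```python
from collections import deque
from pathlib import PurePosixPath
from urllib.parse import urlparse


def plan_crawl(start, link_graph, max_depth, allowed_suffixes):
    """Breadth-first walk over link_graph, limited by depth and file type.

    link_graph is a hypothetical in-memory stand-in for the network: it maps
    each URL to the list of URLs that page links to.
    """
    queue = deque([(start, 0)])
    seen = {start}
    selected = []
    while queue:
        url, depth = queue.popleft()
        suffix = PurePosixPath(urlparse(url).path).suffix.lower()
        # URLs without a suffix (such as "/") are treated as HTML pages.
        if suffix == "" or suffix in allowed_suffixes:
            selected.append(url)
        if depth == max_depth:
            continue  # do not follow links beyond the depth limit
        for nxt in link_graph.get(url, []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append((nxt, depth + 1))
    return selected
```

With a limit of one hop, only the start page and the files it links to directly are selected; raising the limit lets the walk reach pages further from the start, while the suffix set decides which of the reached files are kept.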

HTTrack Features

- Offline browsing and web mirroring

- Recursive website downloading

- Customizable download options

- Supports various file types including HTML, images, CSS, JavaScript, etc.

- Multilingual interface

- Ability to resume interrupted downloads

- Scheduling and automated website updates

Pricing

- Open Source

The Best HTTrack Alternatives

Top web browsers, website copiers, and other apps similar to HTTrack

Here are some alternatives to HTTrack:

Wget

SiteSucker

WebCopy

Website Downloader

Website Copier Online Free

Teleport Pro

Web Downloader (Chrome Extension)

Offline Explorer

Website Ripper Copier

ScrapBook

Save Page WE

ArchiveBox

Web2disk

WebCopier

WebZip

WebReaper

SiteCrawler

HTTP Ripper

ScrapBook X

Grab-site

Darcy Ripper

WebScrapBook

Offline Pages Pro

PageArchiver

SurfOffline

Webrecorder

Site Snatcher

SitePuller

NCollector Studio

BlackWidow

PageNest

Fossilo

ItSucks

Wysigot

PageFreezer

Web Dumper

WebsiteToZip

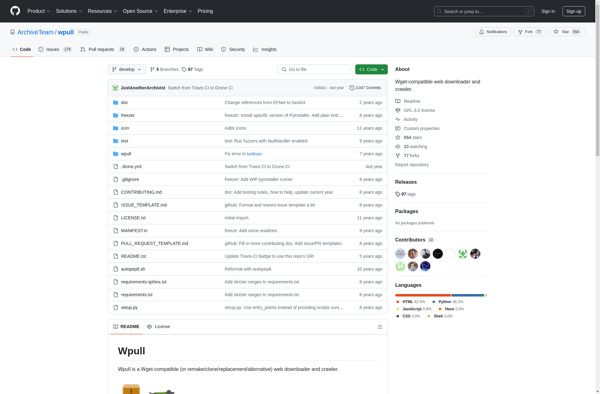

Wpull

WinWSD