Website Downloader: Download Entire Websites Locally

Software that downloads entire websites to your computer, preserving each site's structure and assets for offline browsing.

What is Website Downloader?

Website Downloader is a desktop application that downloads websites from the internet to your local computer or device. It retrieves the HTML pages, images, CSS stylesheets, JavaScript files, PDFs and other assets that make up a website, so you can browse the site offline.
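
Collecting a page's assets mostly comes down to reading the URLs in its markup and resolving them against the page's own address. The Python sketch below illustrates that step with the standard library's `html.parser`; the `ASSET_ATTRS` table and the `extract_urls` helper are hypothetical names, and nothing here describes how Website Downloader itself parses pages.

```python
from html.parser import HTMLParser
from typing import List
from urllib.parse import urljoin

# Tag/attribute pairs that typically reference linked pages or page assets.
# This table is an illustrative assumption, not Website Downloader's rule set.
ASSET_ATTRS = {
    "a": "href",
    "link": "href",
    "img": "src",
    "script": "src",
    "source": "src",
    "iframe": "src",
}


class AssetCollector(HTMLParser):
    """Collects absolute URLs of pages and assets referenced by one HTML page."""

    def __init__(self, base_url: str) -> None:
        super().__init__()
        self.base_url = base_url
        self.urls: List[str] = []

    def handle_starttag(self, tag, attrs):
        wanted = ASSET_ATTRS.get(tag)
        if wanted is None:
            return
        for name, value in attrs:
            if name == wanted and value:
                # Resolve relative references against the page's own URL.
                self.urls.append(urljoin(self.base_url, value))


def extract_urls(html: str, base_url: str) -> List[str]:
    """Return every asset/page URL found in html, in document order."""
    collector = AssetCollector(base_url)
    collector.feed(html)
    collector.close()
    return collector.urls


if __name__ == "__main__":
    page = """
    <link rel="stylesheet" href="css/site.css">
    <script src="/js/app.js"></script>
    <img src="images/logo.png">
    <a href="docs/manual.pdf">Manual</a>
    """
    for url in extract_urls(page, "https://example.com/about/"):
        print(url)
```

Running it prints four resolved URLs; the root-relative script path, for example, becomes `https://example.com/js/app.js`. A complete downloader would also scan CSS files for `url(...)` references and handle `srcset` attributes, which this sketch skips.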

Some key features of Website Downloader include:

- Preserves the original structure and styling of websites for offline browsing

- Adjustable download depth: download just the home page or the entire site

- Respects robots.txt rules

- Downloads text, images, scripts, multimedia and other file types

- Configurable filters and rules to control what gets downloaded

- Monitoring and reporting tools

- Easy to use with step-by-step wizards

- Useful for web developers, researchers, digital archivists and readers who want saved copies of sites
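
Depth limits, robots.txt rules and download filters all act at the same point: deciding which discovered link to follow next. The sketch below shows one way to combine them in a breadth-first crawl. It assumes a caller-supplied `fetch_links(url)` function (hypothetical, so the crawl logic runs without network access) and regular-expression include/exclude filters of our own choosing; only `urllib.robotparser.RobotFileParser` is a real library API here.

```python
import re
from collections import deque
from typing import Callable, Iterable, List, Optional
from urllib.parse import urljoin, urlparse
from urllib.robotparser import RobotFileParser


def crawl(
    start_url: str,
    fetch_links: Callable[[str], Iterable[str]],  # hypothetical page-fetching hook
    robots: RobotFileParser,
    max_depth: int = 1,
    include: str = r".*",
    exclude: Optional[str] = None,
    user_agent: str = "*",
) -> List[str]:
    """Return the URLs a depth-limited, same-host crawl would download, in order."""
    host = urlparse(start_url).netloc
    seen = {start_url}
    downloaded: List[str] = []
    queue = deque([(start_url, 0)])  # (url, depth) pairs, processed breadth-first

    while queue:
        url, depth = queue.popleft()
        # Skip anything robots.txt disallows for this user agent.
        if not robots.can_fetch(user_agent, url):
            continue
        downloaded.append(url)
        # Depth 0 is the start page; stop following links at max_depth.
        if depth >= max_depth:
            continue
        for href in fetch_links(url):
            link = urljoin(url, href)
            # Stay on the starting host and never queue a URL twice.
            if urlparse(link).netloc != host or link in seen:
                continue
            # Include/exclude filters, expressed as regular expressions.
            if not re.search(include, link):
                continue
            if exclude is not None and re.search(exclude, link):
                continue
            seen.add(link)
            queue.append((link, depth + 1))
    return downloaded


if __name__ == "__main__":
    # A tiny in-memory "site" so the example runs offline.
    site = {
        "https://example.com/": ["/a.html", "/private/x.html", "https://other.org/"],
        "https://example.com/a.html": ["/b.html", "/report.pdf"],
        "https://example.com/b.html": [],
    }
    robots = RobotFileParser()
    robots.parse(["User-agent: *", "Disallow: /private/"])
    pages = crawl("https://example.com/", lambda u: site.get(u, []), robots,
                  max_depth=2, exclude=r"\.pdf$")
    print(pages)
```

With `max_depth=0` only the start page is returned; each extra level follows links one hop further. Against a live site, the robots.txt file would normally be loaded with `RobotFileParser.set_url(...)` and `read()` before crawling.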

With Website Downloader, you can save copies of important sites before they are updated or deleted. The local copy serves as a backup you control, which helps with both reliability and privacy. Downloaded websites remain browsable without an internet connection.

Website Downloader Features

- Download entire websites

- Preserve original site structure

- Download website assets like images, CSS, JavaScript

- Configure download settings like max download depth

- Resume interrupted downloads

- Site map visualization

- Batch downloading multiple sites
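
Preserving a site's structure usually means mapping each URL path onto a matching local folder path, and resuming an interrupted file typically relies on an HTTP `Range` request for the bytes still missing. The sketch below shows both ideas; `url_to_local_path`, `resume_request`, the `site/` output folder and the `index.html` naming for directory URLs are illustrative assumptions, not Website Downloader's documented behavior.

```python
from pathlib import PurePosixPath
from urllib.parse import urlparse
from urllib.request import Request


def url_to_local_path(url: str, root: str = "site") -> str:
    """Map a URL onto a relative file path that mirrors the site's folder layout."""
    parts = urlparse(url)
    path = parts.path or "/"
    # Directory-style URLs ("/", "/blog/") are saved as index.html in that folder.
    if path.endswith("/"):
        path += "index.html"
    # Refuse ".." segments so a hostile link cannot escape the output folder.
    if ".." in PurePosixPath(path).parts:
        raise ValueError(f"unsafe path in {url!r}")
    # Query strings and fragments are ignored in this simplified mapping.
    return str(PurePosixPath(root, parts.netloc, path.lstrip("/")))


def resume_request(url: str, bytes_already_saved: int) -> Request:
    """Build a GET request for the part of a file that is still missing."""
    headers = {}
    if bytes_already_saved > 0:
        # Open-ended byte range: everything from this offset to the end.
        headers["Range"] = f"bytes={bytes_already_saved}-"
    return Request(url, headers=headers)


if __name__ == "__main__":
    print(url_to_local_path("https://example.com/"))
    print(url_to_local_path("https://example.com/docs/guide.html"))
    print(resume_request("https://example.com/big.zip", 4096).get_header("Range"))
```

To resume, pass the size of the partial file, for example `resume_request(url, os.path.getsize(partial_path))`, and append the response body to that file. A server that honors the range replies with `206 Partial Content`; a plain `200 OK` means it sent the whole file, so the partial copy should be discarded and rewritten.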

Pricing

- Free

- Freemium

The Best Website Downloader Alternatives

Top Web Browsers and Offline Web Browsing apps, and other apps similar to Website Downloader

Here are some alternatives to Website Downloader:

HTTrack

SiteSucker

WebCopy

Teleport Pro

Web Downloader (Chrome Extension)

Offline Explorer

Website Ripper Copier

ScrapBook

WebSiteSniffer

WebCopier

WebZip

WebReaper

SiteCrawler

HTTP Ripper

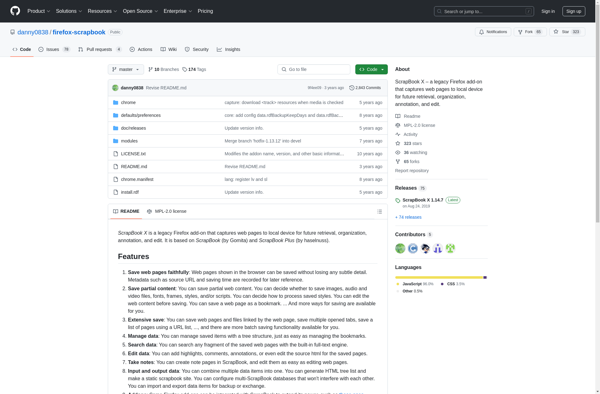

ScrapBook X

Darcy Ripper

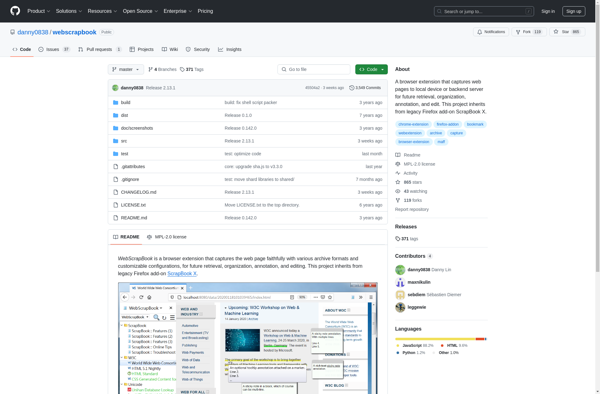

WebScrapBook

Offline Pages Pro

PageArchiver

SurfOffline

Site Snatcher

SitePuller

NCollector Studio

BlackWidow

PageNest

Fossilo

ItSucks

Wysigot

PageFreezer